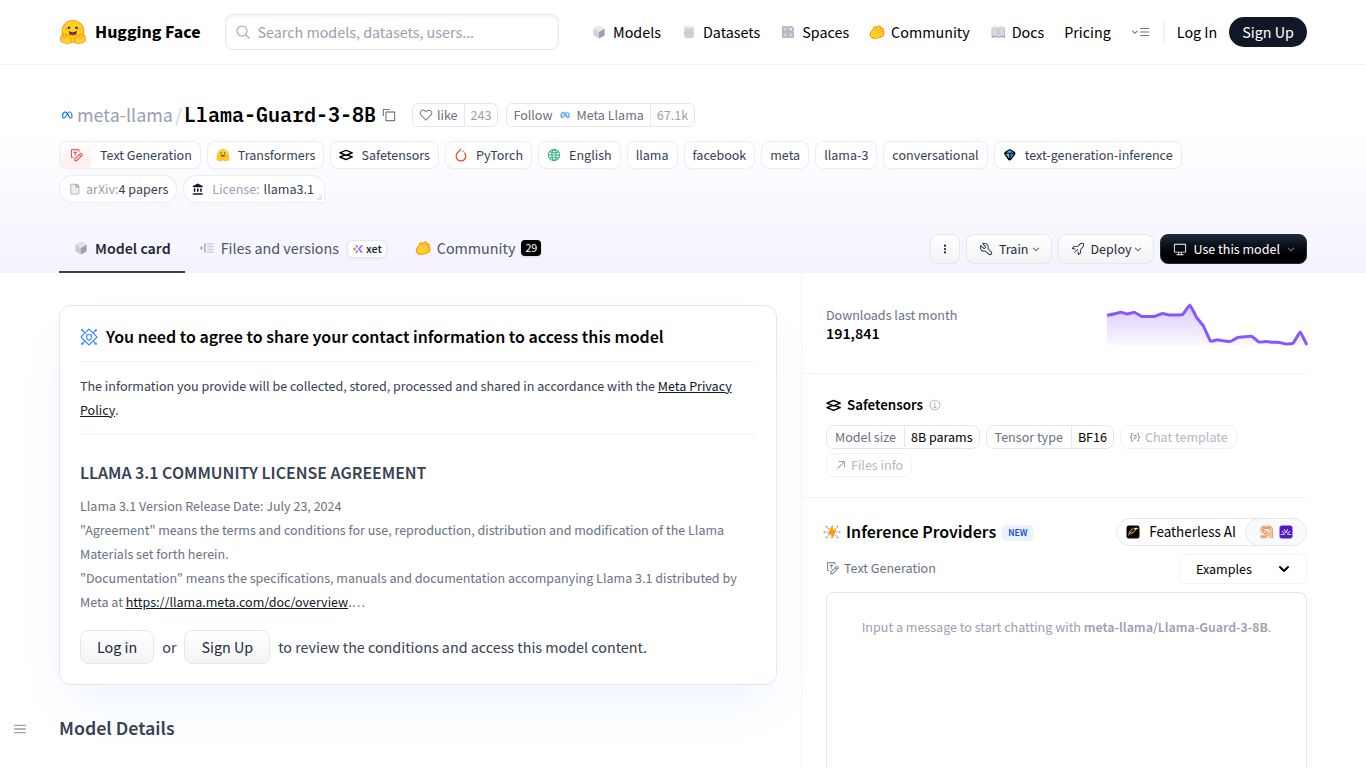

Meta Llama Guard 3: Your AI’s First Line of Defense for Safety and Trust

What is Meta Llama Guard 3?

In the rapidly evolving world of artificial intelligence, safety and responsibility are paramount. Meta Llama Guard 3 is an open-source safety model from Meta. Think of it not as a content creator but as a vigilant security guard for your large language models (LLMs). Its job is to classify content so you can filter both the prompts sent to your AI and the responses it generates against your own content policy. Because it sits between your users and your main model, Llama Guard 3 acts as a shield against harmful or unwanted interactions.
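The control flow described above fits in a few lines of Python. This is a minimal illustration under stated assumptions, not Meta's code. `guarded_chat`, `is_safe`, and `generate` are hypothetical names. In a real deployment, `is_safe` would wrap a call to Llama Guard 3 and `generate` would call your main LLM. The toy stand-ins at the bottom exist only so the sketch runs on its own.

```python
from typing import Callable, Optional

# Hypothetical interface: a safety check receives the user prompt and,
# optionally, the assistant reply. In practice this would call Llama Guard 3.
SafetyCheck = Callable[[str, Optional[str]], bool]

REFUSAL = "Sorry, I can't help with that."


def guarded_chat(prompt: str,
                 generate: Callable[[str], str],
                 is_safe: SafetyCheck) -> str:
    # 1. Prompt screening: classify the user turn before the main LLM sees it.
    if not is_safe(prompt, None):
        return REFUSAL
    # 2. Call the main LLM only for prompts that passed screening.
    response = generate(prompt)
    # 3. Response moderation: classify the reply together with its prompt.
    if not is_safe(prompt, response):
        return REFUSAL
    return response


if __name__ == "__main__":
    # Toy stand-ins for illustration only: a keyword check and an echo "LLM".
    def toy_check(prompt: str, response: Optional[str]) -> bool:
        text = prompt if response is None else response
        return "forbidden" not in text.lower()

    def echo(prompt: str) -> str:
        return f"You said: {prompt}"

    print(guarded_chat("Hello there", echo, toy_check))  # You said: Hello there
    print(guarded_chat("Something forbidden", echo, toy_check))  # the refusal text
```

The same `is_safe` callable handles both checks. The only difference between the two calls is whether a response is included.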

Core Capabilities: A Master of Textual Analysis

It’s important to understand that Meta Llama Guard 3 is a specialized tool. It does not generate images, videos, or audio. The 8B model focuses on a single domain: safety classification of text. (Meta released a separate Llama Guard 3 Vision variant for prompts that include images.) Given a user prompt, or a prompt together with a model’s response, it returns a verdict of safe or unsafe.

- Prompt Screening: Before a user’s prompt even reaches your main LLM, Llama Guard 3 can analyze it for malicious intent, policy violations, or harmful content, stopping potential issues at the source.

- Response Moderation: It also checks the output generated by your LLM for unsafe content, providing a final check before anything is displayed to the user.

- Detailed Harm Classification: Beyond the basic “safe” or “unsafe” verdict, it names the specific hazard categories a piece of content violates, such as Hate, Violent Crimes, or Suicide & Self-Harm. Llama Guard 3 uses 14 categories (S1 to S14), which allows for more granular control and reporting.
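In practice, the model’s generated text begins with `safe` or `unsafe`. For unsafe content, a second line lists the violated category codes, separated by commas (for example `S1,S10`). Below is a minimal parser for that output. The category names follow the Llama Guard 3 model card as we understand it, so confirm them against the current card. `parse_verdict` and `Verdict` are hypothetical helpers, not part of any Meta library.

```python
from dataclasses import dataclass, field

# Hazard categories as listed in the Llama Guard 3 model card (S1 to S14).
# Verify against the current model card before relying on this mapping.
CATEGORIES = {
    "S1": "Violent Crimes",
    "S2": "Non-Violent Crimes",
    "S3": "Sex-Related Crimes",
    "S4": "Child Sexual Exploitation",
    "S5": "Defamation",
    "S6": "Specialized Advice",
    "S7": "Privacy",
    "S8": "Intellectual Property",
    "S9": "Indiscriminate Weapons",
    "S10": "Hate",
    "S11": "Suicide & Self-Harm",
    "S12": "Sexual Content",
    "S13": "Elections",
    "S14": "Code Interpreter Abuse",
}


@dataclass
class Verdict:
    safe: bool
    codes: list[str] = field(default_factory=list)

    @property
    def labels(self) -> list[str]:
        # Unknown codes are kept verbatim rather than silently dropped.
        return [CATEGORIES.get(code, code) for code in self.codes]


def parse_verdict(raw: str) -> Verdict:
    """Parse 'safe', or 'unsafe' followed by a line of comma-separated codes."""
    lines = [ln.strip() for ln in raw.strip().splitlines() if ln.strip()]
    if not lines:
        raise ValueError("empty classifier output")
    head = lines[0].lower()
    if head == "safe":
        return Verdict(safe=True)
    if head == "unsafe":
        codes = []
        if len(lines) > 1:
            codes = [c.strip() for c in lines[1].split(",") if c.strip()]
        return Verdict(safe=False, codes=codes)
    raise ValueError(f"unexpected classifier output: {raw!r}")


if __name__ == "__main__":
    verdict = parse_verdict("\n\nunsafe\nS1,S10")
    print(verdict.safe, verdict.labels)  # False ['Violent Crimes', 'Hate']
```

The parser tolerates leading whitespace and rejects anything else, so a malformed generation surfaces as an error instead of passing as “safe”.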

Key Features that Set Llama Guard 3 Apart

Llama Guard 3 isn’t just another keyword filter. Several features distinguish it as a safety layer for modern AI applications.

- Standardized Hazard Taxonomy: Its categories are aligned with the MLCommons standardized hazard taxonomy. This gives developers category-level insight into content safety rather than a bare binary label.

- Open Source and Customizable: The weights are freely available under the Llama 3.1 Community License. Within the license’s terms, developers can inspect, self-host, and fine-tune Llama Guard 3 to fit their specific safety policies and use cases. Closed, API-only services do not offer this level of control.

- High Efficiency: Llama Guard 3 is fine-tuned from Llama 3.1 8B, an 8-billion-parameter model. That is small by LLM standards, so it can be served with low latency and is less likely to become a bottleneck in your application’s user experience.

- Dual-Purpose Design: A single model classifies both prompts and responses, which simplifies your safety pipeline and reduces operational complexity.
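Llama Guard 3 reports violated categories as codes (for example `S6` for Specialized Advice). This means an application can choose how strictly to treat each category without retraining anything. Below is a minimal post-processing sketch. The `POLICY` table, the `Action` levels, and `decide` are hypothetical, and you should replace them with your own rules. Fine-tuning remains the heavier option when the model’s categories themselves don’t match your needs.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REVIEW = "review"  # e.g. route to a human moderator
    BLOCK = "block"


# Hypothetical example policy keyed by Llama Guard 3 category code.
# Codes missing from the table fall back to DEFAULT_ACTION.
POLICY = {
    "S6": Action.REVIEW,   # Specialized Advice
    "S8": Action.ALLOW,    # Intellectual Property: not enforced in this app
    "S13": Action.REVIEW,  # Elections
}
DEFAULT_ACTION = Action.BLOCK

# Higher rank wins when several categories are flagged at once.
_RANK = {Action.ALLOW: 0, Action.REVIEW: 1, Action.BLOCK: 2}


def decide(codes: list[str], policy: dict[str, Action] = POLICY) -> Action:
    """Map violated category codes to the strictest configured action."""
    if not codes:
        return Action.ALLOW
    actions = (policy.get(code, DEFAULT_ACTION) for code in codes)
    return max(actions, key=_RANK.__getitem__)


if __name__ == "__main__":
    print(decide(["S8"]).value)        # allow
    print(decide(["S6"]).value)        # review
    print(decide(["S6", "S1"]).value)  # block
```

Taking the strictest action across all flagged categories is a conservative choice. For example, content flagged for both S6 and S1 is blocked, even though S6 alone would only be sent for review.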

Pricing: The Power of Open Source

One of the most compelling aspects of Meta Llama Guard 3 is its pricing model. The model weights are free to download and use under the Llama 3.1 Community License. Your costs come from the compute resources (server hosting and GPU time) needed to run inference in your own environment. This makes it a cost-effective option, especially for startups and researchers, because there are no per-request fees paid to a vendor.
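Compute is the main cost, so a back-of-the-envelope estimate of GPU memory helps with sizing. The sketch below applies the usual rule of thumb: weight memory ≈ parameter count × bytes per parameter. It assumes the nominal 8 billion parameters. The estimate covers the weights only. It ignores activations, the KV cache, quantization metadata, and framework overhead, so real usage will be higher. `weight_memory_gb` is a hypothetical helper.

```python
# Bytes per parameter for common weight formats (weights only).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, dtype: str = "bf16") -> float:
    """Approximate memory for the model weights alone, in decimal gigabytes."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    n_params = 8e9  # nominal "8B" parameter count (an approximation)
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: ~{weight_memory_gb(n_params, dtype):.0f} GB")
```

For 8 billion parameters, this gives roughly 32 GB in fp32, 16 GB in bf16, 8 GB with 8-bit weights, and 4 GB with 4-bit weights, before any runtime overhead.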

Who is Llama Guard 3 For?

This tool is valuable for anyone building, deploying, or managing applications that use large language models. The primary users include:

- AI Developers and Engineers: Those who are building chatbots, content generation tools, or any LLM-powered application and need a robust, customizable safety layer.

- Platform and Product Managers: Individuals responsible for user safety and trust on platforms that integrate generative AI, ensuring a positive and secure user experience.

- Enterprise Teams: Businesses deploying internal or external AI tools that must adhere to strict compliance, brand safety, and ethical AI standards.

- AI Safety Researchers: Academics and researchers exploring the frontiers of AI safety and alignment, who can use Llama Guard 3 as a powerful baseline for their work.

Alternatives and Comparisons

While Llama Guard 3 is a formidable tool, it’s helpful to know how it stacks up against other options in the AI safety landscape.

Llama Guard 3 vs. OpenAI Moderation API

The main difference is philosophy and control. The OpenAI Moderation API is a hosted, proprietary service that is easy to integrate, and OpenAI currently offers it free of charge to API users. However, your content is sent to OpenAI for classification, and you cannot change its categories. Llama Guard 3, by contrast, runs on your own infrastructure. That gives you more control, customizability, and data privacy. For teams that need to fine-tune safety policies or avoid vendor lock-in, Llama Guard 3 is often the better fit.

Llama Guard 3 vs. Other Open-Source Models

Within the open-source community, Llama Guard 3 stands out thanks to Meta’s backing, its extensive training, and a taxonomy aligned with the MLCommons hazard categories. Its 8B parameter size strikes a good balance between capability and efficiency, making it one of the most practical open-source safety models available. For developers seeking a reliable open-source solution, Llama Guard 3 is a strong default and a useful baseline against which to compare alternatives.

Related Tools

Robust Intelligence AI Firewall

Protect AI ModelScan

OpenAI Moderation API (omni-moderation-latest)

Microsoft Community Sift (Two Hat)

Jigsaw Perspective API

Azure AI Content Safety

Lasso Moderation