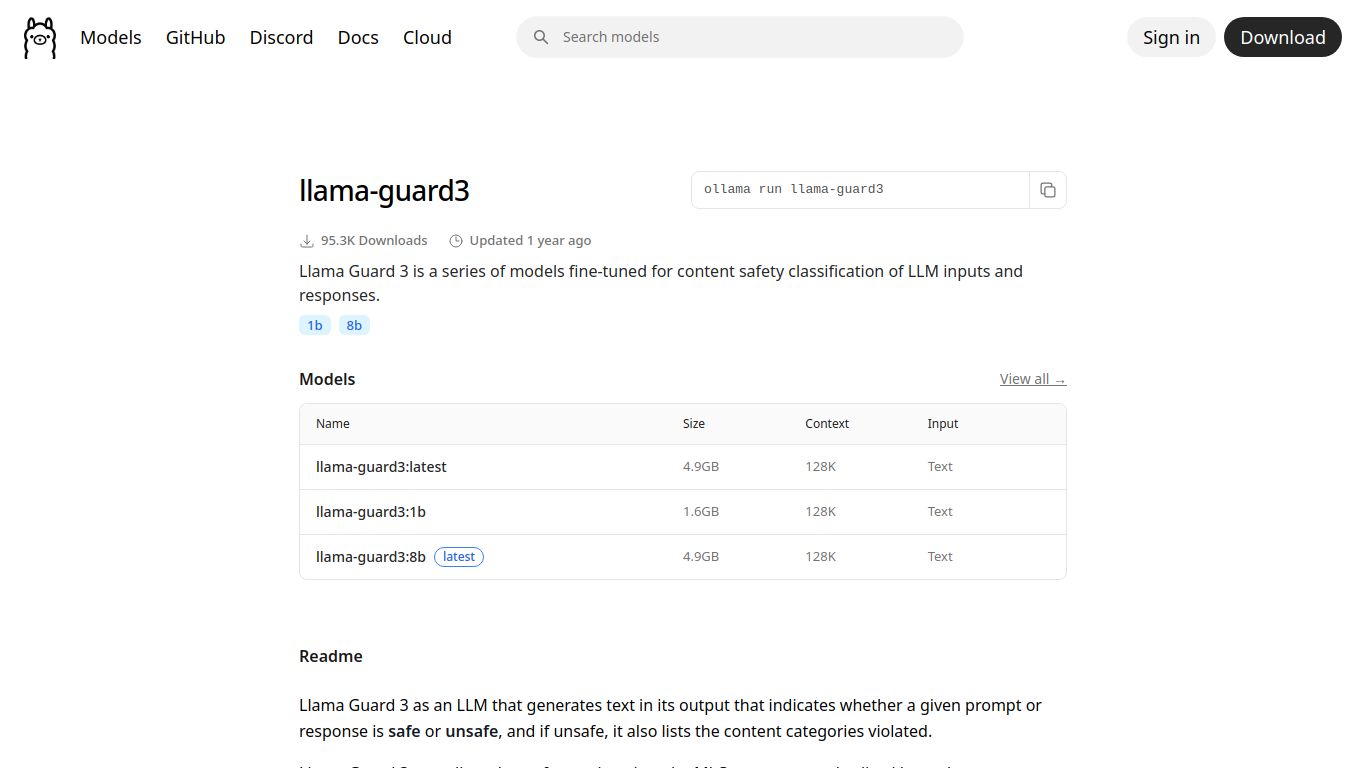

Ollama + Llama Guard 3: Your Personal AI Safety Net, Running Locally

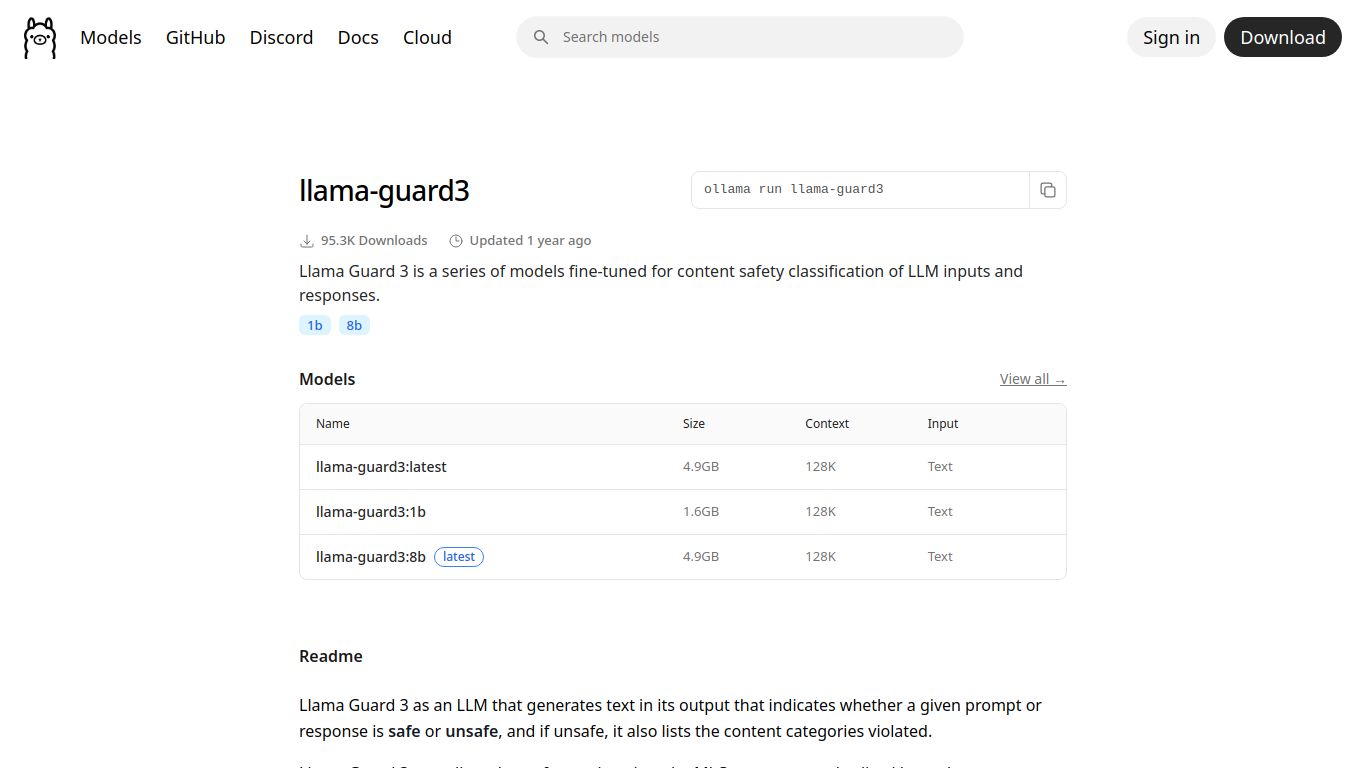

As more products put AI-powered conversations in front of users, keeping those conversations safe and appropriate matters. Ollama + Llama Guard 3 puts a dedicated AI safety model right on your local machine. Developed by Meta, Llama Guard 3 is a safeguard model that classifies content in LLM prompts and responses, so unsafe or harmful material can be caught before it reaches a user. Running it through Ollama, a popular tool for running and managing LLMs locally, gives you control, privacy, and customization over your safety checks without sending your data to the cloud.

What Can It Actually Do? A Deep Dive into Capabilities

Llama Guard 3 is not a general-purpose generative tool: it doesn’t produce images, video, or free-form text. Its single, specialized job is AI Content Moderation. Think of it as a security guard for your AI applications. It analyzes text and flags content against a defined safety taxonomy. Its core functions include:

- Prompt & Response Auditing: It can screen both the user’s input (prompt) and the AI’s generated output (response) to ensure the entire conversation remains within safe boundaries.

- Harmful Content Detection: Llama Guard 3 is trained to spot a wide array of problematic content, including violent and non-violent crimes, hate, suicide and self-harm, sexual content, and privacy violations.

- Policy Adherence: It helps enforce specific content policies, making it invaluable for building applications that align with community guidelines or brand safety standards.

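- Risk Classification: For each check, the model returns a verdict of safe or unsafe and, when unsafe, the codes of the violated categories (for example, S10 for hate). Its text output does not include a numeric confidence score; developers who want custom thresholds commonly derive one from the probability of the first output token, where their runtime exposes it.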
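The functions above can be sketched in a few lines of Python. This is a minimal example under stated assumptions, not a reference implementation: it assumes Ollama is listening on its default local address, that the model was pulled under the name `llama-guard3`, and that the reply follows the usual Llama Guard shape of `safe`, or `unsafe` followed by a line of category codes. The helper names `parse_verdict` and `classify` are made up for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local address
MODEL = "llama-guard3"  # assumed model name; use whatever `ollama list` shows


def parse_verdict(raw: str) -> tuple[bool, list[str]]:
    """Turn the guard model's text reply into (is_safe, category_codes)."""
    lines = [line.strip() for line in raw.strip().splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty reply from the guard model")
    verdict = lines[0].lower()
    if verdict == "safe":
        return True, []
    if verdict == "unsafe":
        # The second line, when present, lists codes such as "S1,S10".
        codes = lines[1].split(",") if len(lines) > 1 else []
        return False, [code.strip() for code in codes if code.strip()]
    raise ValueError(f"unexpected verdict: {lines[0]!r}")


def classify(messages: list[dict]) -> tuple[bool, list[str]]:
    """Send a conversation to the local model and parse its verdict."""
    payload = json.dumps({"model": MODEL, "messages": messages, "stream": False})
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return parse_verdict(body["message"]["content"])
```

To screen a prompt, pass a single `user` message to `classify`; to screen a response, pass the `user` message followed by the `assistant` message so the model judges the latest turn in context.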

Core Features That Set It Apart

Running Llama Guard 3 with Ollama isn’t just about safety; it’s about smart, private, and flexible safety. Here are the standout features:

100% Local & Private: The entire process happens on your hardware. Your prompts and data are never sent to a third-party server, ensuring maximum confidentiality and data security.

Open and Inspectable: Ollama is open source (MIT license), and Llama Guard 3’s weights are openly available under Meta’s Llama community license. That gives you transparency and the freedom to inspect, fine-tune, and adapt the model to your needs within the license terms.

Seamless Ollama Integration: Setting up is a breeze. If you’re already using Ollama, adding Llama Guard 3 is as simple as running a single command, making it incredibly accessible for developers and enthusiasts alike.
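On the command line, that single command is typically `ollama pull llama-guard3`. For setups that provision models from a script, the same step might look like the sketch below. It assumes the `ollama` CLI is installed and on your PATH; the size-specific tags mentioned in the comments (`llama-guard3:1b`, `llama-guard3:8b`) are assumptions to check against the Ollama model library, and the function names are hypothetical.

```python
import shutil
import subprocess


def build_pull_command(model: str = "llama-guard3") -> list[str]:
    """Build the CLI invocation that downloads a model into Ollama."""
    # A size-specific tag such as "llama-guard3:1b" can be passed instead.
    return ["ollama", "pull", model]


def pull_model(model: str = "llama-guard3") -> None:
    """Download the model, failing early if the CLI is missing."""
    if shutil.which("ollama") is None:
        raise RuntimeError("the `ollama` CLI was not found on PATH")
    # check=True raises CalledProcessError if the download fails.
    subprocess.run(build_pull_command(model), check=True)
```

Calling `pull_model()` once at deploy time keeps the download out of the request path.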

Cost-Effective: Eliminate expensive, recurring API fees for content moderation. The only cost is the hardware you already own, making it a budget-friendly solution for startups and indie developers.

Standardized Taxonomy: Llama Guard 3 classifies content against the hazard categories of the MLCommons taxonomy, with the 8B model adding a category for code interpreter abuse. Each category has a short code (S1, S2, and so on), which makes results easy to log, filter, and compare across applications.
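Because the model reports categories as short codes, a small lookup table makes its output readable in logs and dashboards. The mapping below reflects the category names as published for the Llama Guard 3 8B model; treat it as a convenience sketch and verify it against the model card for the version you run. The `describe` helper is hypothetical.

```python
# Category codes and names as listed for Llama Guard 3 (8B).
HAZARD_CATEGORIES = {
    "S1": "Violent Crimes",
    "S2": "Non-Violent Crimes",
    "S3": "Sex-Related Crimes",
    "S4": "Child Sexual Exploitation",
    "S5": "Defamation",
    "S6": "Specialized Advice",
    "S7": "Privacy",
    "S8": "Intellectual Property",
    "S9": "Indiscriminate Weapons",
    "S10": "Hate",
    "S11": "Suicide & Self-Harm",
    "S12": "Sexual Content",
    "S13": "Elections",
    "S14": "Code Interpreter Abuse",
}


def describe(codes: list[str]) -> list[str]:
    """Translate category codes into human-readable names."""
    # Unknown codes are kept visible rather than silently dropped.
    return [HAZARD_CATEGORIES.get(code, f"Unknown ({code})") for code in codes]
```

For example, `describe(["S10", "S11"])` yields the names for hate and for suicide and self-harm.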

Let’s Talk Money: The Pricing Plan

This is the best part. The pricing for Ollama + Llama Guard 3 is refreshingly simple:

It’s Completely Free.

Yes, you read that right. Ollama is open source, and the Llama Guard 3 weights are free to download and use for both research and commercial purposes, subject to Meta’s license terms. There are no subscription plans, no per-request fees, and no hidden costs. Your only investment is in the computational hardware (like a decent GPU) required to run the model locally.

Who Is This For? The Ideal User Profile

This local AI safety solution is perfect for a diverse range of users who prioritize privacy, control, and cost-effectiveness:

- AI Application Developers: Individuals and teams building chatbots, content creation tools, or any LLM-powered application who need a reliable, built-in safety layer.

- Indie Hackers & Startups: Entrepreneurs who need to implement robust content moderation without the high costs of cloud-based API solutions.

- AI Researchers & Academics: Professionals studying AI safety, alignment, and ethics who require an open and inspectable model for their experiments.

- Privacy Advocates: Anyone developing applications that handle sensitive or personal information and cannot risk sending data to external services.

- AI Hobbyists: Enthusiasts who want to explore the cutting edge of AI safety and run powerful models on their personal computers.

How Does It Stack Up? Alternatives & Comparison

While Llama Guard 3 on Ollama is a fantastic choice, it’s helpful to see how it compares to cloud-based alternatives. The primary distinction is Local vs. Cloud API.

| Feature | Ollama + Llama Guard 3 (Local) | Cloud APIs (e.g., OpenAI Moderation, Google Perspective) |
|---|---|---|
| Pricing | Free (requires user’s hardware) | Varies by provider: some are free within rate limits, others are pay-per-use and can become expensive at scale |
| Privacy | Maximum Privacy. Data never leaves your machine. | Data is sent to a third-party server for processing. |
| Customization | Highly Customizable. Can be fine-tuned for specific policies. | Limited to the provider’s pre-defined categories and rules. |
| Control & Ownership | Full Control. You own the entire pipeline. | Dependent on the provider’s availability, terms of service, and potential policy changes. |
| Setup Complexity | Requires hardware setup and command-line knowledge. | Very easy to integrate with a simple API call. |

In summary, if your priority is privacy, no per-request cost, and full control over your safety model, the Ollama + Llama Guard 3 combination is hard to beat. For those who prefer a quicker, maintenance-free setup and are comfortable with the provider’s pricing, rate limits, and data-sharing policies, cloud-based APIs remain a viable alternative.
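Owning the pipeline means you decide which categories matter and where the checks run. The sketch below shows one possible arrangement, screening the prompt before generation and the response after it, with an application-specific set of enforced categories. Everything here is illustrative: `classify` and `generate` are placeholders you would supply (for example, a function that calls your local guard model and one that calls your chat model), and the refusal text is arbitrary.

```python
from typing import Callable

Verdict = tuple[bool, list[str]]  # (is_safe, category codes such as "S10")
Classifier = Callable[[list[dict]], Verdict]
Generator = Callable[[str], str]

REFUSAL = "Sorry, I can't help with that."


def violates(verdict: Verdict, enforced: set[str]) -> bool:
    """Decide whether a verdict breaks this application's policy."""
    is_safe, codes = verdict
    if is_safe:
        return False
    # An "unsafe" verdict with no codes is blocked, so the gate fails closed.
    return not codes or any(code in enforced for code in codes)


def guarded_reply(
    prompt: str,
    generate: Generator,
    classify: Classifier,
    enforced: set[str],
) -> str:
    """Screen the prompt, generate an answer, then screen the answer."""
    conversation = [{"role": "user", "content": prompt}]
    if violates(classify(conversation), enforced):
        return REFUSAL
    answer = generate(prompt)
    conversation.append({"role": "assistant", "content": answer})
    if violates(classify(conversation), enforced):
        return REFUSAL
    return answer
```

A children’s education app might enforce every category, while an internal developer tool might enforce only a few; changing the `enforced` set is all that takes.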
