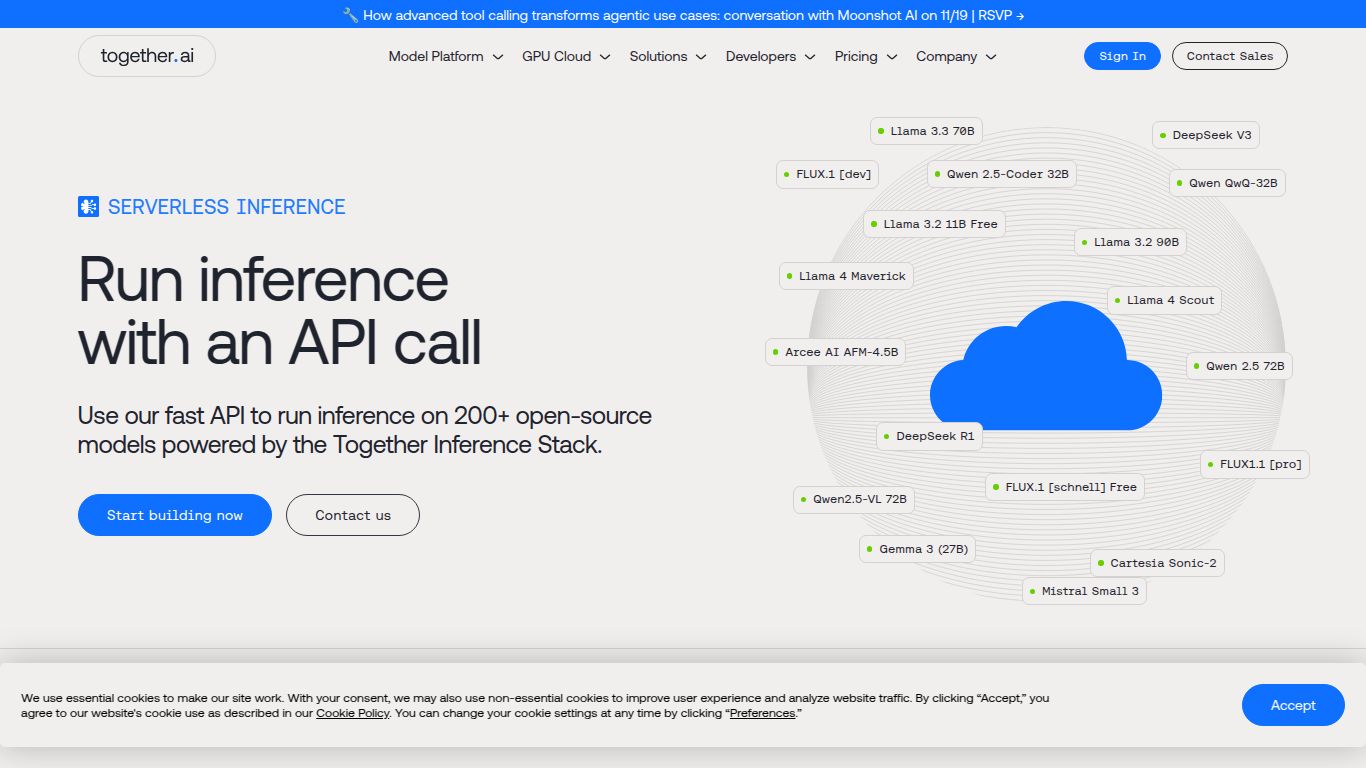

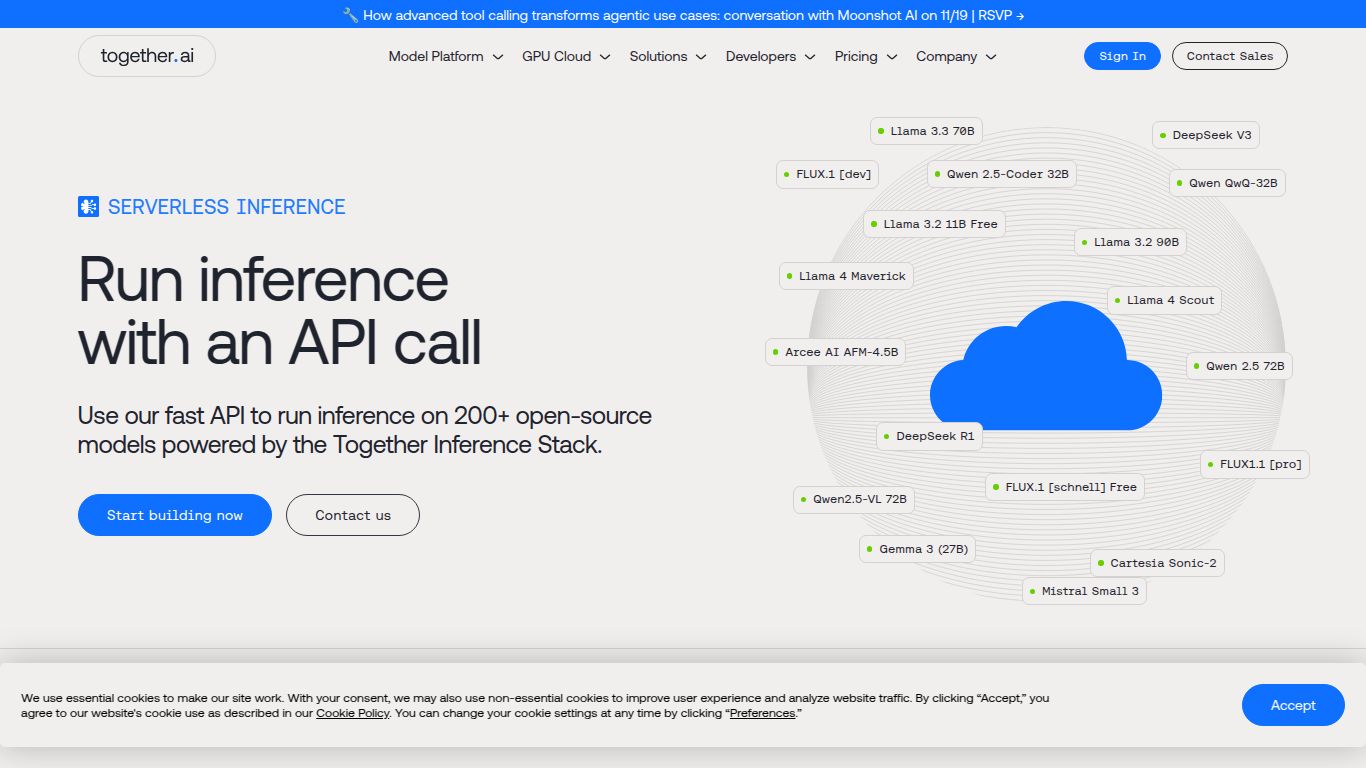

Together AI Inference: Your Supercharged Engine for Open-Source AI

In the fast-moving world of artificial intelligence, developers and businesses want faster, more affordable, and more flexible ways to deploy current models. Together AI Inference is a cloud platform from Together AI, built for running and fine-tuning a wide range of open-source generative AI models. Instead of managing GPUs and server infrastructure yourself, you call hosted models through an API and focus on building AI-powered applications.

What Can You Build with Together AI?

Together AI Inference offers models across several modalities, so the same platform can back a chatbot, an image generator, or a coding assistant.

- Text Generation: This is the core strength of the platform. You gain access to a massive library of leading Large Language Models (LLMs) like Llama 3, Mixtral, and Falcon. Use them for everything from advanced chatbots and content creation to complex summarization and data analysis.

- Code Generation: Supercharge your development workflow with specialized coding models. These AI assistants can help you write, debug, and optimize code in various programming languages, dramatically boosting productivity.

- Image Generation: Tap into the creative potential of text-to-image models like Stable Diffusion. Generate unique, high-quality images for marketing, art, design, or any other visual project simply by describing what you want to see.

- Embeddings: Build powerful semantic search, recommendation engines, or classification systems using state-of-the-art embedding models that transform text into meaningful numerical representations.
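To make the embeddings use case concrete, here is a minimal sketch of how semantic search ranks documents once text has been turned into vectors. The three-dimensional vectors and document names are made up for illustration; real embedding models return much longer vectors, and nothing here calls the Together AI API.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in doc_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical toy vectors standing in for real model embeddings.
DOC_VECS = {
    "refund-policy": [0.9, 0.1, 0.0],
    "gpu-pricing": [0.1, 0.9, 0.2],
    "api-quickstart": [0.0, 0.3, 0.9],
}
query_vec = [0.8, 0.2, 0.1]  # pretend this is the embedded user query

ranking = rank_documents(query_vec, DOC_VECS)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

In a real application the vectors would come from an embedding model and the ranking step would usually be handled by a vector database, but the similarity calculation is the same idea.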

Core Features That Set It Apart

Together AI isn’t just another model hosting service. It’s an entire ecosystem built for performance, efficiency, and developer happiness.

- Blazing-Fast Inference: The platform runs on a custom-engineered inference stack that Together AI positions as among the fastest available for open-source models. Low latency means your users get quick responses, which keeps interactive applications smooth and engaging.

- The Open-Source Model Hub: Why be locked into one ecosystem? Together AI offers a broad selection of leading open-source models, giving you the freedom to choose the right tool for your specific task and budget.

- Effortless Serverless API: Get started in minutes with a simple, pay-as-you-go API. It scales automatically from a handful of requests to millions, so you never have to provision or manage servers.

- Dedicated Instances: For high-volume, mission-critical applications, you can reserve dedicated instances for guaranteed capacity, consistent performance, and even lower costs at scale.

- Seamless Fine-Tuning: Go beyond pre-trained models. Together AI provides powerful and easy-to-use tools to fine-tune models on your own data, creating custom AI that understands your unique domain and voice.
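As a rough illustration of what calling the serverless API looks like, the sketch below builds a chat request using only the Python standard library. It assumes an OpenAI-style chat completions endpoint; the URL, the model identifier, and the `build_chat_request` helper are assumptions made for this example, so check the official API reference before relying on them. The request is only constructed here, not sent.

```python
import json
import urllib.request

# Assumed endpoint and model name, for illustration only.
API_URL = "https://api.together.xyz/v1/chat/completions"
MODEL = "meta-llama/Llama-3-70b-chat-hf"


def build_chat_request(api_key, user_message, model=MODEL, max_tokens=256):
    """Build (but do not send) a POST request for a single-turn chat."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    "YOUR_API_KEY",  # placeholder, replace with a real key
    "Summarize serverless inference in one sentence.",
)
print(request.get_method(), request.full_url)

# Sending requires a valid key and network access:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Because there is no server to provision, switching models is typically just a matter of changing the `model` string in the payload.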

Pricing: Power Meets Affordability

Together AI aims to make AI accessible and is known for competitive, transparent pricing. There are no complex contracts or hidden fees, just straightforward usage-based billing.

- Pay-As-You-Go: The primary model is simple. For text models, you pay per million tokens processed, counting both input and output. For image models, you are typically billed per second of GPU usage. Either way, you pay only for what you use.

- Competitive Rates: The platform often lists some of the lowest rates on the market for top-tier open-source models. For example, running a large model like Llama-3-70B can cost significantly less than on competing platforms or proprietary APIs.

- Dedicated Instance Pricing: For predictable, high-throughput needs, dedicated instances are available at a flat hourly rate, giving you reserved capacity at a fixed cost.
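The billing models above reduce to simple arithmetic. The sketch below estimates a pay-as-you-go bill and the hourly token volume at which a flat-rate dedicated instance would cost the same. Both prices are hypothetical placeholders, not Together AI's actual rates, and the function names are invented for this example.

```python
def serverless_cost(input_tokens, output_tokens, price_per_million):
    """Pay-as-you-go cost: input and output tokens are billed together."""
    total_tokens = input_tokens + output_tokens
    return total_tokens / 1_000_000 * price_per_million


def break_even_tokens_per_hour(hourly_rate, price_per_million):
    """Tokens per hour at which a flat hourly rate equals per-token billing."""
    return hourly_rate / price_per_million * 1_000_000


# Hypothetical prices in USD, chosen only to keep the arithmetic readable.
PRICE_PER_MILLION_TOKENS = 0.50
DEDICATED_HOURLY_RATE = 10.00

monthly_bill = serverless_cost(
    input_tokens=40_000_000,
    output_tokens=10_000_000,
    price_per_million=PRICE_PER_MILLION_TOKENS,
)
threshold = break_even_tokens_per_hour(
    DEDICATED_HOURLY_RATE, PRICE_PER_MILLION_TOKENS
)

print(f"Serverless bill: ${monthly_bill:.2f}")
print(f"Break-even volume: {threshold:,.0f} tokens per hour")
```

With these placeholder numbers, 50 million tokens cost 25 USD on pay-as-you-go, and a dedicated instance only matches that price once sustained traffic reaches 20 million tokens per hour. Plug in the current published rates to run the comparison for your own workload.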

Who is Together AI Inference For?

This platform is a fantastic choice for anyone building with generative AI, from individual hobbyists to large-scale enterprises.

- AI Developers & Engineers: The core audience. If you’re building AI features into an application, the robust API, speed, and model variety are a dream come true.

- Startups and SMBs: Access world-class AI infrastructure without the massive upfront investment or the need for a dedicated MLOps team.

- Researchers and Academics: Experiment with a wide range of open-source models for research projects without breaking the budget.

- AI Enthusiasts and Hobbyists: The low barrier to entry and generous free credits make it easy to start exploring and building cool projects.

Alternatives & Comparison

How does Together AI stack up against the competition? Here’s a quick look:

- vs. Replicate: Replicate is another excellent platform known for its ease of use and vast library of community-contributed models. Together AI often competes heavily on speed and cost, particularly for popular LLMs, positioning itself as a more performance-oriented choice.

- vs. Anyscale/Perplexity: These platforms also offer optimized inference for open-source models. Together AI distinguishes itself with aggressive pricing and a strong focus on inference speed.

- vs. Groq: Groq is famous for its mind-boggling speed, thanks to its custom LPU hardware. However, its model selection is currently more limited. Together AI offers a much broader range of models while still delivering top-tier performance.

- vs. Proprietary APIs (OpenAI, Anthropic): The key difference is philosophy. While proprietary APIs offer highly capable but closed-source models, Together AI champions the open-source movement, giving developers more control, transparency, and the ability to fine-tune models for their specific needs.
