SmolLM2: The Power of AI in a Pocket-Sized Package

In a world where AI models are often gigantic and resource-hungry, SmolLM2 by Hugging Face emerges as a breath of fresh air. This isn’t your typical behemoth language model; it’s a compact, efficient, and versatile tool designed for speed and accessibility. As the “Smol” in its name suggests, SmolLM2 is a small language model, yet it packs a surprising punch. It’s built to run efficiently on standard hardware, making it a strong choice for developers, researchers, and hobbyists who want capable AI without a supercomputer. It’s all about democratizing AI and putting the power of language generation into everyone’s hands.

What Can SmolLM2 Do?

SmolLM2 works with text, and it’s important to understand that text is its entire domain. As a language model, its capabilities are focused on processing and generating text-based content. It’s designed to be a nimble building block for a variety of applications.

- Text Generation: From crafting short stories and blog post drafts to generating creative product descriptions, SmolLM2 can produce coherent and contextually relevant text on demand.

- Simple Q&A: It can function as a lightweight question-answering system, perfect for integrating into chatbots or internal knowledge bases.

- Summarization: Feed it a piece of text, and it can provide a concise summary, helping you digest information quickly.

- Code Snippets: While not a full-fledged coding assistant, it’s capable of generating simple code snippets and helping with boilerplate code in various programming languages.

Importantly, as a pure language model, SmolLM2 does not have capabilities for image generation, video creation, or audio processing. Its strength lies entirely within the realm of text.
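
As a rough illustration of the text tasks listed above, the sketch below sends a prompt through the Transformers library. It assumes the `HuggingFaceTB/SmolLM2-135M-Instruct` checkpoint on the Hugging Face Hub and that `transformers` and `torch` are installed. The helper names `build_messages` and `generate_reply`, and the prompt templates, are hypothetical choices made for this example rather than part of any library.

```python
# Illustrative sketch: the prompt templates and helper names are assumptions,
# not an official SmolLM2 API.
TASK_TEMPLATES = {
    "generate": "Write a short piece of text about: {text}",
    "qa": "Answer the following question briefly: {text}",
    "summarize": "Summarize the following text in two sentences:\n{text}",
    "code": "Write a short code snippet that does the following: {text}",
}


def build_messages(task: str, text: str) -> list[dict]:
    """Wrap the user's text in a single chat message for one of the tasks above."""
    if task not in TASK_TEMPLATES:
        raise ValueError(f"unknown task: {task!r}")
    return [{"role": "user", "content": TASK_TEMPLATES[task].format(text=text)}]


def generate_reply(
    messages: list[dict],
    checkpoint: str = "HuggingFaceTB/SmolLM2-135M-Instruct",  # assumed checkpoint
    max_new_tokens: int = 100,
) -> str:
    """Load the model (downloaded on first use) and generate a reply on CPU."""
    # Imported lazily so the prompt helper works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForCausalLM.from_pretrained(checkpoint)
    prompt = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply(build_messages("summarize", some_text))` would then return the model’s summary as a string. Output quality from a model this small varies, so it is worth checking results on your own inputs.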

Core Features: Why Choose Smol?

What truly sets SmolLM2 apart are its unique features, which are all centered around efficiency and accessibility.

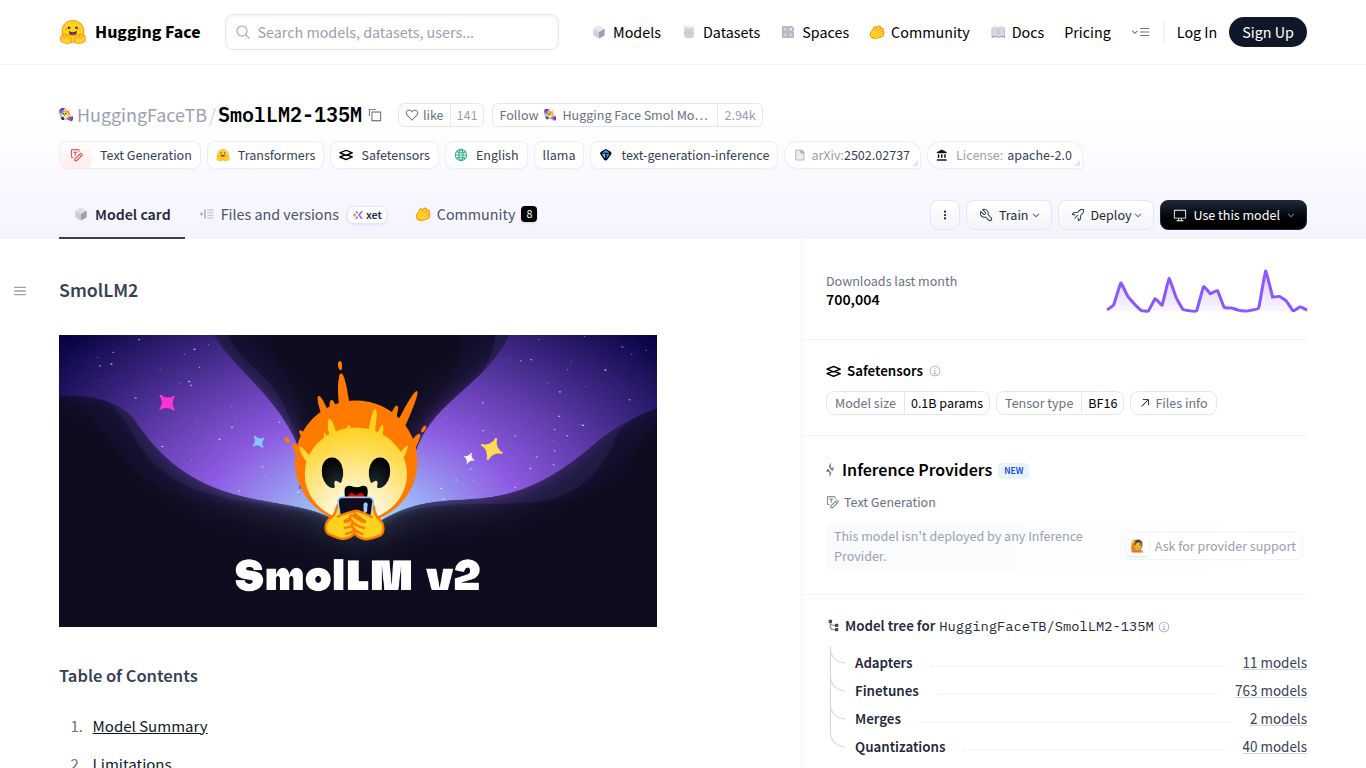

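- Ultra-Lightweight: The smallest SmolLM2 checkpoint has only 135 million parameters (the family also includes 360 million and 1.7 billion parameter versions), which is tiny compared to multi-billion parameter giants. This means it requires significantly less memory and storage.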

- Blazing-Fast Inference: Fewer parameters mean less computation per generated token, so responses arrive quickly even on modest hardware, which makes the model a good fit for real-time applications.

- Runs Anywhere: Forget expensive cloud GPUs. SmolLM2 is designed to run efficiently on consumer-grade CPUs and GPUs, and even on edge devices, enabling offline and private AI applications.

- Highly Customizable: Because the model is open source, developers can fine-tune SmolLM2 on their own datasets to create specialized models for specific tasks with minimal computational cost.

- Open Source & Free: It’s completely free to use, modify, and distribute, making it a cost-effective solution for startups, students, and independent projects.
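
To put the “less memory and storage” claim in numbers, here is a back-of-the-envelope sketch. It estimates weight size as parameter count times bytes per parameter, using the 135 million figure above. The 7-billion-parameter comparison model is a hypothetical stand-in for a “multi-billion parameter giant”, and the estimate covers weights only, not activations or other runtime overhead.

```python
def weight_memory_mb(n_params: int, bytes_per_param: float) -> float:
    """Approximate size of the model weights in megabytes (1 MB = 10**6 bytes)."""
    return n_params * bytes_per_param / 1_000_000


SMOL_PARAMS = 135_000_000      # parameter count quoted in the feature list
LARGE_PARAMS = 7_000_000_000   # hypothetical larger model, for comparison only

# Common numeric precisions and the bytes each one uses per parameter.
for label, bytes_per_param in [("float32", 4), ("float16/bfloat16", 2), ("int8", 1)]:
    smol = weight_memory_mb(SMOL_PARAMS, bytes_per_param)
    large = weight_memory_mb(LARGE_PARAMS, bytes_per_param)
    print(f"{label:>17}: 135M model ~{smol:,.0f} MB, 7B model ~{large:,.0f} MB")
```

At 2 bytes per parameter, the 135M model’s weights come to roughly 270 MB, against about 14,000 MB for the 7B example.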

Pricing: Absolutely Free!

This section is simple. SmolLM2 is 100% free. It is an open-source model hosted on Hugging Face and released under the Apache 2.0 license, so there are no subscription plans, no API fees, and no hidden costs associated with the model itself. You can download it and use it for commercial or personal projects without any charge. The only cost you’ll incur is for running it: electricity on your own hardware, or compute time if you rent a cloud machine.

Who is SmolLM2 For?

SmolLM2 is the perfect fit for a specific set of users who value efficiency, control, and low cost over raw power.

- AI/ML Developers: Ideal for prototyping applications, building lightweight AI features, and running models in resource-constrained environments.

- Researchers & Academics: A great tool for conducting experiments in natural language processing without the need for massive computational grants.

- Students & Hobbyists: A fantastic entry point into the world of large language models, allowing for hands-on experience on a personal computer.

- Indie Hackers & Startups: Enables the creation of AI-powered products with minimal upfront investment and operational costs. Because the model can run locally, user data can stay private.

Alternatives & Comparison

While SmolLM2 is a fantastic tool, it’s part of a growing ecosystem of small language models. Here’s how it stacks up:

SmolLM2 vs. Phi-3 Mini

Microsoft’s Phi-3 Mini (3.8 billion parameters) is a major competitor known for its impressive performance despite its small size. Phi-3 Mini often provides more capable and coherent outputs. However, SmolLM2 is much smaller and lighter, making it the better choice for tightly constrained environments such as mobile devices or small edge boards, where every megabyte counts.

SmolLM2 vs. Google’s Gemma (2B)

Google’s Gemma models (like the 2-billion parameter version) are also strong open-source contenders. Gemma 2B is more powerful and knowledgeable than SmolLM2, but it also has higher hardware requirements. Choose SmolLM2 when your absolute priority is minimal resource footprint and maximum speed over a large knowledge base.

SmolLM2 vs. TinyLlama

TinyLlama is another project focused on creating compact yet capable models. Both projects share a similar philosophy. The choice between them often comes down to specific benchmark performance on your target task. SmolLM2, backed by Hugging Face, offers seamless integration with the vast Transformers ecosystem, which can be a significant advantage for development.
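
Since the choice often comes down to performance on your own task, a small evaluation harness can help. The sketch below scores any text-generating function by exact-match accuracy on a handful of prompts. The `exact_match_accuracy` helper, the example prompts, and the two stand-in “models” are all hypothetical and exist only to make the sketch runnable without downloads. In practice you would pass in functions that call SmolLM2, TinyLlama, or another candidate.

```python
from typing import Callable


def exact_match_accuracy(
    generate: Callable[[str], str], examples: list[tuple[str, str]]
) -> float:
    """Fraction of prompts whose normalized output equals the expected answer."""
    if not examples:
        raise ValueError("need at least one example")
    hits = sum(
        generate(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in examples
    )
    return hits / len(examples)


# Tiny made-up task set; replace with examples from your own use case.
EXAMPLES = [
    ("Capital of France?", "Paris"),
    ("2 + 2 =", "4"),
    ("Opposite of hot?", "cold"),
]


# Stand-in "models": plain lookup functions instead of real checkpoints.
def model_a(prompt: str) -> str:
    return {"Capital of France?": "Paris", "2 + 2 =": "4"}.get(prompt, "")


def model_b(prompt: str) -> str:
    return {"Capital of France?": "paris "}.get(prompt, "")


scores = {
    name: exact_match_accuracy(fn, EXAMPLES)
    for name, fn in [("model_a", model_a), ("model_b", model_b)]
}
best = max(scores, key=scores.get)
print(scores, "->", best)
```

Exact match is a deliberately strict metric. For open-ended generation you would likely swap in a softer comparison, but the structure of the comparison stays the same.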
