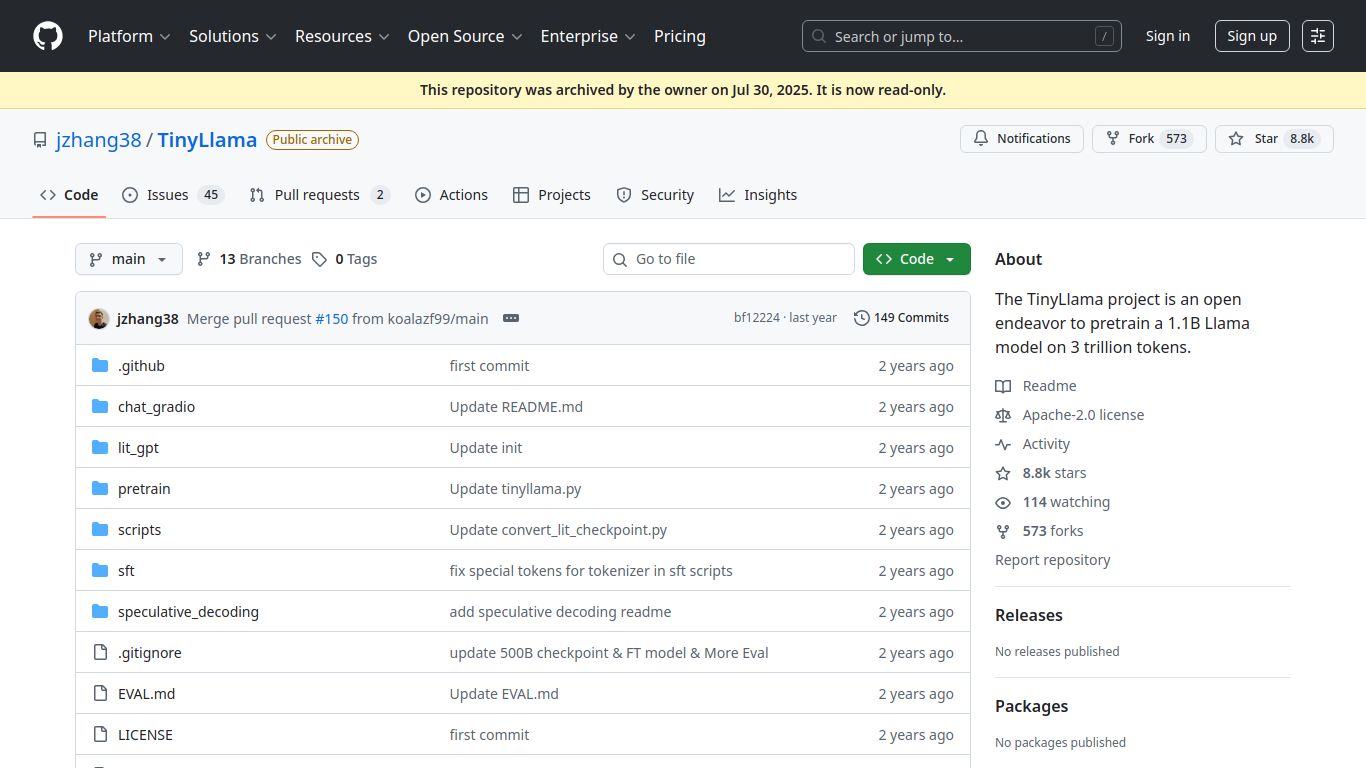

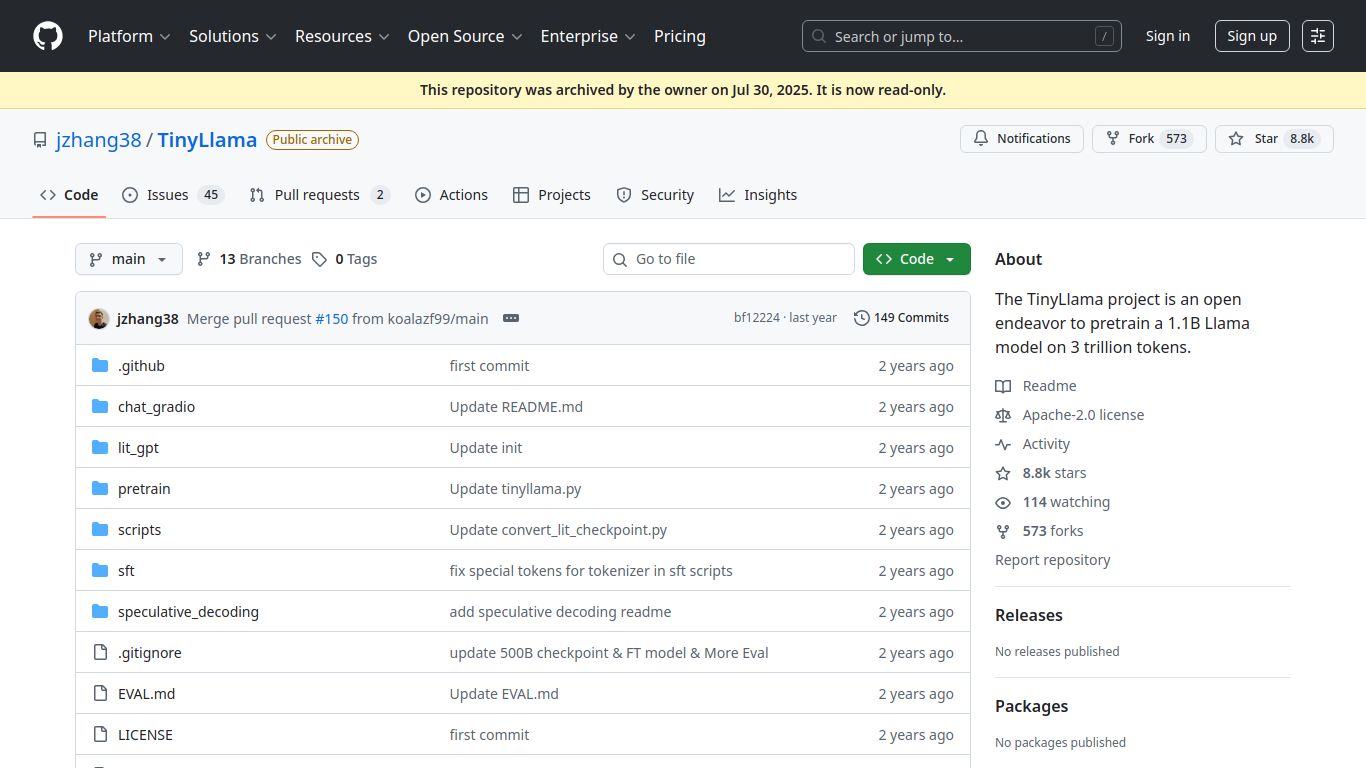

TinyLlama 1.1B: The Pocket-Sized Language Model Powerhouse

Welcome to the next wave of AI accessibility! TinyLlama 1.1B, an open-source project, is here to prove that capable language models don’t need to live in massive data centers. Developed by researchers at the Singapore University of Technology and Design, TinyLlama is a compact 1.1 billion parameter model designed to bring large language models (LLMs) to resource-constrained environments. It’s a lean, mean, text-generating machine, trained on roughly 3 trillion tokens to deliver impressive performance in a remarkably small package. This project helps democratize AI, making it possible for developers, hobbyists, and researchers to run a capable LLM on consumer-grade hardware.

Capabilities: Focused on Textual Excellence

It’s important to understand where TinyLlama shines. This model is a specialist, focusing exclusively on the vast world of text. It does not generate images, audio, or video. Instead, it channels all its power into linguistic tasks, offering strong capabilities in:

- Text Generation: From writing creative stories and composing emails to generating marketing copy, TinyLlama can produce coherent and contextually relevant text.

- Conversational AI: Build lightweight chatbots and virtual assistants that can run locally without constant cloud connectivity.

- Code Assistance: It can help developers by generating short code snippets, explaining programming concepts, and assisting with simple debugging in various languages. At this model size, treat its output as a first draft to review.

- Summarization & Q&A: Condense long documents into key points or ask direct questions about a piece of text to get concise answers.

- Language Understanding: Use it for tasks like sentiment analysis, keyword extraction, and other natural language processing (NLP) applications.
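To make the list above concrete, here is a minimal sketch of local chat-style generation. It assumes the Hugging Face `transformers` library (plus PyTorch) is installed and uses the `TinyLlama/TinyLlama-1.1B-Chat-v1.0` checkpoint, which is an assumption on our part since this page does not name a specific checkpoint. The helper names `build_messages`, `load_pipeline`, and `generate_reply` are hypothetical and exist only for illustration.

```python
from functools import lru_cache

# Assumed checkpoint name; swap in whichever TinyLlama variant you actually use.
MODEL_ID = "TinyLlama/TinyLlama-1.1B-Chat-v1.0"


def build_messages(user_text, system_text="You are a concise, helpful assistant."):
    """Return a chat history in the role/content format used by chat templates."""
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_text},
    ]


@lru_cache(maxsize=1)
def load_pipeline():
    """Load the model once and reuse it across calls."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import pipeline

    return pipeline("text-generation", model=MODEL_ID)


def generate_reply(user_text, max_new_tokens=128):
    """Generate one assistant reply for a single user message."""
    pipe = load_pipeline()
    # Let the tokenizer's own chat template turn the messages into a prompt string.
    prompt = pipe.tokenizer.apply_chat_template(
        build_messages(user_text), tokenize=False, add_generation_prompt=True
    )
    outputs = pipe(
        prompt,
        max_new_tokens=max_new_tokens,
        do_sample=False,          # greedy decoding for repeatable output
        return_full_text=False,   # return only the newly generated text
    )
    return outputs[0]["generated_text"].strip()
```

Calling `generate_reply("Summarize this paragraph in one sentence: ...")` downloads the weights on first use and then runs entirely on the local machine. The same function covers summarization, Q&A, or sentiment labeling by changing only the user message.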

Core Features: Why Choose TinyLlama?

TinyLlama isn’t just another language model; its design philosophy offers a unique set of advantages that make it stand out from the crowd.

- Extremely Compact: With only 1.1 billion parameters, it has a tiny memory footprint. Quantized to 4 bits, the weights take roughly 550MB (about 2.2GB at 16-bit precision), which lets it run on laptops, single-board computers like the Raspberry Pi, and even some modern smartphones.

- Fully Open-Source: TinyLlama adopts the Llama 2 architecture and tokenizer but is trained from scratch and released under the permissive Apache 2.0 license, so it is free for both academic and commercial use. This encourages innovation and allows anyone to build upon it.

- Impressive Performance: Despite its small size, it punches above its weight. Its authors report that it outperforms comparably sized open models such as OPT-1.3B and Pythia-1.4B on commonsense reasoning benchmarks.

- Efficiency and Speed: Its small size translates to significantly faster inference speeds and lower computational costs compared to giant models, making it ideal for real-time applications.

- Fine-Tunable: Developers can easily fine-tune the base model on their own datasets to create specialized versions tailored to specific tasks or industries.
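The memory footprint behind the "Extremely Compact" point is simple arithmetic: parameter count times bytes per parameter. The sketch below is a rough estimate of weight storage only; the function name `weight_memory_mb` is hypothetical, and real quantized files come out somewhat larger because they also store scaling factors and metadata.

```python
def weight_memory_mb(n_params: float, bits_per_param: int) -> float:
    """Approximate size of the model weights alone, in MB (1 MB = 10**6 bytes)."""
    return n_params * bits_per_param / 8 / 1e6


N_PARAMS = 1.1e9  # TinyLlama's parameter count

# Compare common storage precisions for the same set of weights.
for label, bits in [("fp32", 32), ("fp16/bf16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"{label:>9}: ~{weight_memory_mb(N_PARAMS, bits):,.0f} MB")
```

This covers weights only. At inference time the runtime also needs memory for activations and the attention cache, so leave headroom beyond these figures. The same function works for any parameter count, which makes it easy to compare against larger models.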

Pricing: The Best Price is Free

Here’s the simple and beautiful part: TinyLlama 1.1B is completely free. As an open-source project, there are no subscription plans, no usage tiers, and no hidden fees.

- Base Model: Free to download, modify, and deploy.

- Commercial Use: Permitted under the Apache 2.0 license, giving you the freedom to build and sell products powered by it.

Your only cost is the hardware you choose to run it on. By eliminating expensive API calls to cloud-based services, TinyLlama can dramatically lower the operational costs of developing and deploying AI-powered applications.

Who is TinyLlama 1.1B For?

This model’s accessibility makes it a perfect fit for a diverse range of users who were previously locked out of the LLM space due to high computational or financial barriers.

- AI Hobbyists & Enthusiasts: Anyone who wants to experiment with a powerful language model on their personal computer without needing an enterprise-grade GPU.

- Developers & Startups: Teams building AI features into applications where efficiency, low latency, and offline capability are critical. Perfect for edge computing and on-device AI.

- Students & Educators: An invaluable tool for learning about the architecture, training, and deployment of large language models in a hands-on, low-cost environment.

- Researchers: A fantastic, lightweight base model for conducting research in areas like model optimization, quantization, and efficient AI.

Alternatives & Comparison

How does TinyLlama 1.1B stack up against the competition? It’s all about trade-offs between size, performance, and accessibility.

Against Other Small Models (e.g., Phi-2, Pythia)

TinyLlama holds a strong position in the small model arena. It is often compared to Microsoft’s Phi-2 (a 2.7B model). While Phi-2 may have an edge in certain reasoning tasks due to its size and specialized training data, TinyLlama’s key advantages are its even smaller footprint and the fact that it has been Apache 2.0 licensed from the start, whereas Phi-2 first shipped under a research-only license and was only later relicensed under MIT.

Against Larger Models (e.g., Mistral 7B, Llama 2 7B)

This isn’t a fair fight, nor is it meant to be. Larger models like Mistral 7B will generally provide more nuanced, accurate, and creative responses. However, they require several times more memory and compute (roughly 14GB of weights at 16-bit precision for a 7B model, versus about 2.2GB for TinyLlama), putting them out of reach for many on-device applications or users with standard hardware. TinyLlama’s value proposition is not to outperform these giants, but to provide “good enough” or even great performance at a fraction of the resource cost. It’s the difference between needing a dedicated server and being able to run AI in your pocket.

Relevant Navigation

KoboldCpp

Open Interpreter

GPT4All (Desktop)

Open WebUI

OLMo 2 (AI2)

RisuAI

Apple OpenELM