RWKV-7 (family): The Next-Gen Open-Source AI That Thinks Like an RNN

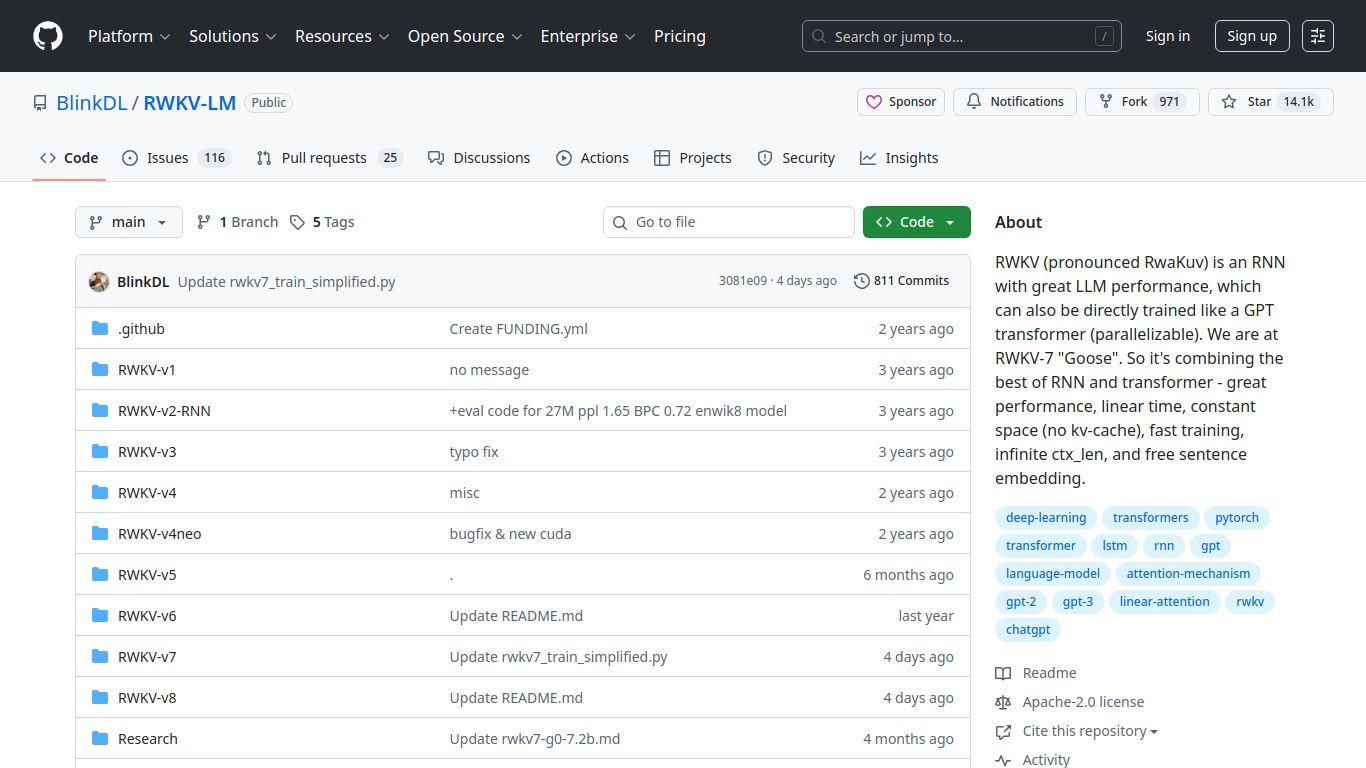

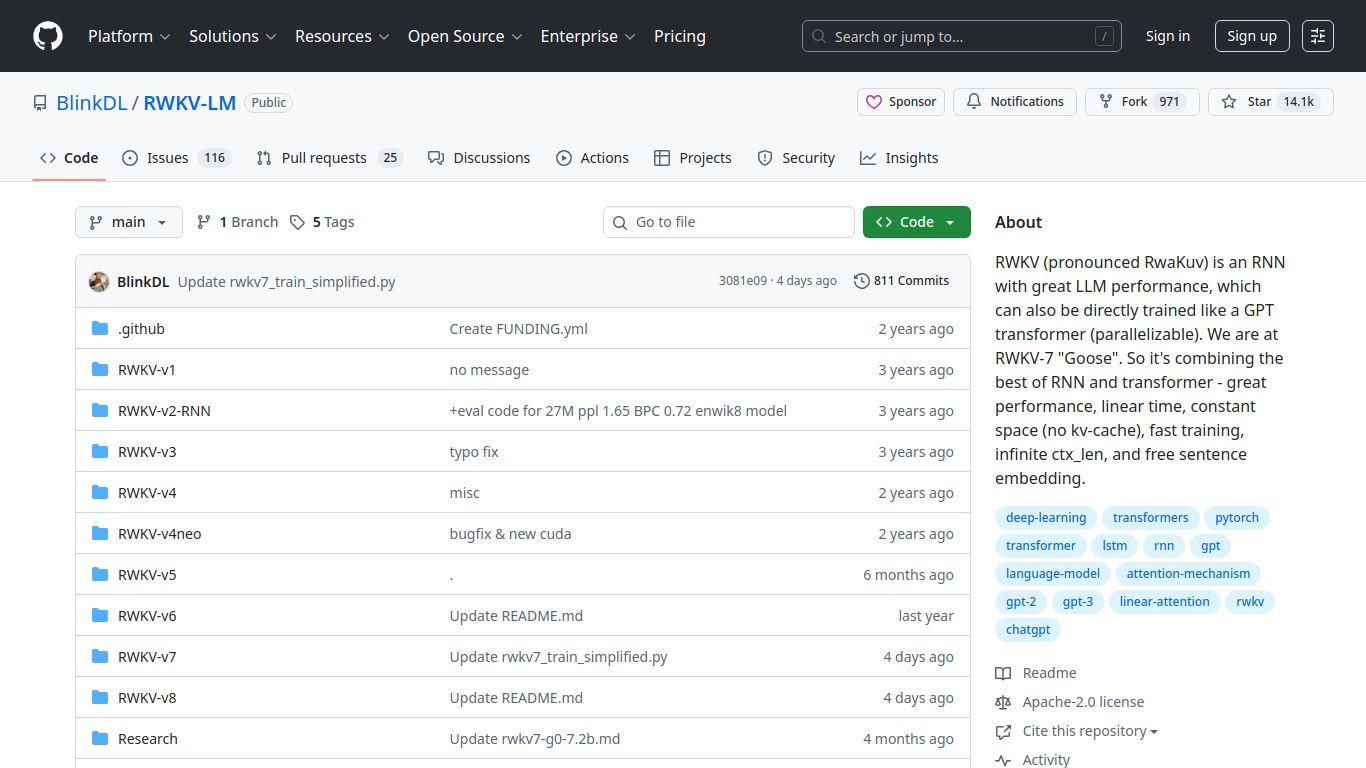

RWKV-7 (family) is an open-source language model project led by BlinkDL, and it takes a different route from most Large Language Models. This isn’t just another GPT clone; it rethinks the underlying architecture. RWKV, which stands for Receptance Weighted Key Value, combines the parallelizable training of Transformers with the constant-memory, step-by-step inference of RNNs. The result is a fast, resource-friendly model family that makes capable AI easier to run on modest hardware.

Core Capabilities: Master of Language and Code

The RWKV-7 family focuses on text and logical reasoning. It doesn’t natively generate images or videos; its strengths are linguistic. Its core capabilities include:

- Sophisticated Text Generation: From writing creative stories and composing insightful articles to drafting professional emails, RWKV produces fluent, coherent, and context-aware text.

- Powerful Code Generation: Developers can leverage RWKV to write, debug, and explain code snippets across a multitude of programming languages, significantly speeding up development workflows.

- Intelligent Chat & Conversational AI: Build highly responsive and engaging chatbots and virtual assistants that can remember past interactions for more natural, human-like conversations.

- Complex Problem Solving: Use it for summarization, translation, question-answering, and other NLP tasks, with fast inference even on long inputs.

Unpacking the Unique Features of RWKV

What sets RWKV apart from the crowd is its architecture, which drops attention in favor of a recurrent formulation.

- Attention-Free Design: By replacing the computationally expensive “attention” mechanism of traditional Transformers with a recurrent update, RWKV avoids a key-value cache that grows with every token. That speeds up inference and cuts VRAM use, especially on long inputs.

- RNN Efficiency: It operates like an RNN during inference, so the compute and memory needed per token stay constant as the context gets longer. Because the state has a fixed size, memory imposes no hard limit on context length, unlike the growing cache of a standard Transformer. In practice, how well it recalls distant tokens depends on what that fixed-size state retains.

- Green & Eco-Friendly AI: Its computational efficiency can translate to lower energy consumption per generated token, making it a more sustainable choice for AI development.

- Completely Open Source: With its Apache 2.0 license, RWKV offers complete transparency. You can inspect the code, fine-tune the models on your own data, and deploy them commercially or privately, subject to the license's attribution and notice terms.

- Scalable Performance: The RWKV-7 family includes models of various sizes (e.g., 1.5B, 3B, 7B, 14B parameters), allowing you to balance output quality against resource requirements. Available sizes vary by release, so check the project's current model listings before planning around a specific one.
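
The fixed-memory behavior described under RNN Efficiency can be illustrated with a toy recurrence. This is a minimal sketch and not RWKV-7's actual update rule: it uses a simplified decayed key-value state, and the function names, the `decay` value, and the way `r`, `k`, and `v` are derived from token ids are all assumptions made for illustration. It shows only that the recurrent state stays the same size while a Transformer-style cache would grow with each token.

```python
def step(state, r, k, v, decay=0.9):
    """One recurrent step: decay the old state, add the outer product of k and v,
    then read the state with the receptance-like vector r."""
    d = len(k)
    new_state = [
        [decay * state[i][j] + k[i] * v[j] for j in range(d)]
        for i in range(d)
    ]
    out = [sum(r[i] * new_state[i][j] for i in range(d)) for j in range(d)]
    return new_state, out


def run(tokens, d=4):
    """Process token ids one at a time and report memory use in floats."""
    state = [[0.0] * d for _ in range(d)]
    out = [0.0] * d
    kv_cache_floats = 0  # what a key-value cache would hold for the same input

    for t in tokens:
        # Hypothetical stand-ins for learned projections of the token.
        r = [((t + i) % 5) / 5 for i in range(d)]
        k = [((t * 3 + i) % 7) / 7 for i in range(d)]
        v = [((t * 5 + i) % 11) / 11 for i in range(d)]
        state, out = step(state, r, k, v)
        kv_cache_floats += 2 * d  # one key vector and one value vector per token

    state_floats = d * d  # fixed, independent of how many tokens were seen
    return state_floats, kv_cache_floats, out


if __name__ == "__main__":
    for n in (10, 1000):
        state_floats, cache_floats, _ = run(range(n))
        print(f"{n} tokens: state={state_floats} floats, cache={cache_floats} floats")
```

With the default `d=4`, the state holds 16 floats whether the input is 10 tokens or 1,000, while the hypothetical cache grows from 80 to 8,000 floats.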

Pricing: Power for a Price You Can’t Beat

This is the best part. RWKV-7 is COMPLETELY FREE to download and use. As a fully open-source project, there are no subscription fees, no API call charges, and no hidden costs. The only investment is the computational hardware required to run it. Model weights take about the same space as those of a Transformer with the same parameter count, but because there is no key-value cache growing with the context, RWKV models can fit on consumer-grade GPUs at context lengths where comparable Transformer-based models would run out of memory. This lowers the financial barriers to entry for developers, researchers, and hobbyists worldwide.
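
To get a rough sense of the hardware involved, the memory needed just to hold a model's weights can be estimated as parameter count times bytes per parameter. The sketch below is a back-of-envelope calculation under stated assumptions: the function name and precision table are hypothetical, the parameter counts are illustrative, and the estimate covers weights only, not activations, recurrent state, or framework overhead.

```python
# Bytes used per parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(params_billion, precision="fp16"):
    """Estimate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * BYTES_PER_PARAM[precision]
    return total_bytes / 1e9


if __name__ == "__main__":
    for size in (1.5, 3, 7, 14):
        fp16 = weight_memory_gb(size, "fp16")
        int8 = weight_memory_gb(size, "int8")
        print(f"{size}B params: ~{fp16:.1f} GB at fp16, ~{int8:.1f} GB at int8")
```

By this estimate a 7B-parameter model needs about 14 GB for fp16 weights, so it generally calls for quantization or a card with more than 16 GB once runtime overhead is included.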

Who is RWKV-7 For?

- Indie Developers & Hobbyists: Build powerful AI applications on your personal computer without breaking the bank on cloud services.

- Startups & SMEs: Create and deploy custom, self-hosted AI solutions that ensure data privacy and avoid expensive, recurring API costs.

- AI Researchers & Academics: Explore a novel LLM architecture that challenges the Transformer-dominated landscape and opens new avenues for research.

- Privacy-Conscious Users: Run a capable LLM entirely offline on your local machine, ensuring your sensitive data never leaves your control.

Alternatives & How RWKV Compares

While the AI space is crowded, RWKV carves out a distinct niche based on its architectural trade-offs.

RWKV vs. Llama/Mistral

Llama and Mistral are strong, openly released Transformer models. However, their attention cost grows quadratically with sequence length, and their key-value cache grows with every token. RWKV shines in scenarios requiring very long context and low-latency inference, offering a more memory-efficient alternative, especially on constrained hardware.
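
The scaling difference can be made concrete by counting operations. The sketch below is a simplified count and not a benchmark: it assumes causal attention, where each token scores against itself and every earlier token, and compares that with one state update per token for a recurrent model. The function names are hypothetical, and real-world speed also depends on hidden size, kernels, and hardware.

```python
def causal_attention_scores(n):
    """Token-pair scores computed by causal attention over n tokens: 1 + 2 + ... + n."""
    return n * (n + 1) // 2


def recurrent_updates(n):
    """State updates performed by a recurrent model over n tokens."""
    return n


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        ratio = causal_attention_scores(n) / recurrent_updates(n)
        print(f"n={n}: attention scores={causal_attention_scores(n)}, "
              f"recurrent updates={recurrent_updates(n)}, ratio={ratio:.1f}")
```

At 1,000 tokens causal attention computes 500,500 scores against 1,000 recurrent updates, and the gap widens in proportion to the sequence length.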

RWKV vs. GPT-4/Claude

Models like GPT-4 are closed-source and accessed via API. RWKV is the antithesis: it’s open, transparent, and self-hostable. It empowers you with full control over the model and your data, freeing you from reliance on a third-party provider and their pricing structure.

RWKV vs. Mamba

Mamba, a selective state space model, is another architecture challenging Transformers. Both RWKV and Mamba aim for linear-time inference with a fixed-size state instead of attention. RWKV’s distinguishing points are its RNN formulation with Transformer-style parallel training, an established community, and a wide range of available model sizes.
