NVIDIA NeMo: A Deep Dive into the Open-Source Conversational AI Toolkit

Conversational AI moves quickly, and flexible tooling matters. NVIDIA NeMo is an open-source toolkit from NVIDIA that helps developers and researchers build high-performance conversational AI models. It’s a framework built on PyTorch that streamlines the workflow for automatic speech recognition (ASR), natural language processing (NLP), and text-to-speech (TTS) synthesis. Whether you’re building a virtual assistant or a real-time transcription service, NeMo provides the building blocks to get there.

Core Capabilities: More Than Just Speech Recognition

While often highlighted for its ASR prowess, NeMo is a versatile toolkit covering the full spectrum of conversational AI. It focuses on the following domains:

- Automatic Speech Recognition (ASR): This is NeMo’s flagship capability. It allows you to build models that convert spoken language into written text with high accuracy. These models can be fine-tuned for specific domains, accents, and noisy environments.

- Natural Language Processing (NLP): Beyond just hearing, NeMo helps machines understand language. Its NLP modules cover tasks like question answering, punctuation, named entity recognition, and text classification, providing the “brains” for any conversational agent.

- Text-to-Speech (TTS) Synthesis: NeMo completes the conversational loop by enabling you to generate natural, human-like speech from text. This is essential for creating voice responses in applications like virtual assistants and navigation systems.
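ASR accuracy is commonly reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the model's transcript into the reference, divided by the number of reference words. The sketch below is a minimal, dependency-free illustration of that metric. It is not NeMo's own implementation, and the function name and the simple lowercase-and-split normalization are assumptions made for the example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Illustrative helper (not part of NeMo). Text is lowercased and split on
    whitespace; real evaluations usually apply more careful normalization.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # turning i reference words into an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)
```

For example, comparing the reference "turn on the kitchen lights" with the transcript "turn on kitchen light" involves one deleted word and one substituted word out of five reference words. A lower WER means a more accurate transcript, and fine-tuning on domain data is one way to try to reduce it.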

Please note: this overview covers NeMo’s conversational AI capabilities (ASR, NLP, and TTS). Image and video generation are outside its scope.

Key Features That Make NeMo Stand Out

What sets NeMo apart from the crowd? It’s all in the details and the developer-first approach.

- State-of-the-Art Pre-trained Models: Get a massive head start with a rich collection of pre-trained models from NVIDIA. These models, trained on vast datasets, offer top-tier performance out-of-the-box and can be easily fine-tuned for your specific needs.

- Highly Modular and Composable: NeMo’s architecture is designed like a set of building blocks. You can easily mix and match encoders, decoders, and language models to experiment and create custom model architectures without reinventing the wheel.

- Built on PyTorch: By leveraging the power and flexibility of PyTorch, NeMo integrates seamlessly into the modern deep learning ecosystem, making it familiar to a wide range of developers and researchers.

- Scalability for Enterprise Use: NVIDIA knows a thing or two about scale. NeMo is optimized for training on multi-GPU and multi-node systems, allowing you to tackle massive datasets and build truly large-scale models.

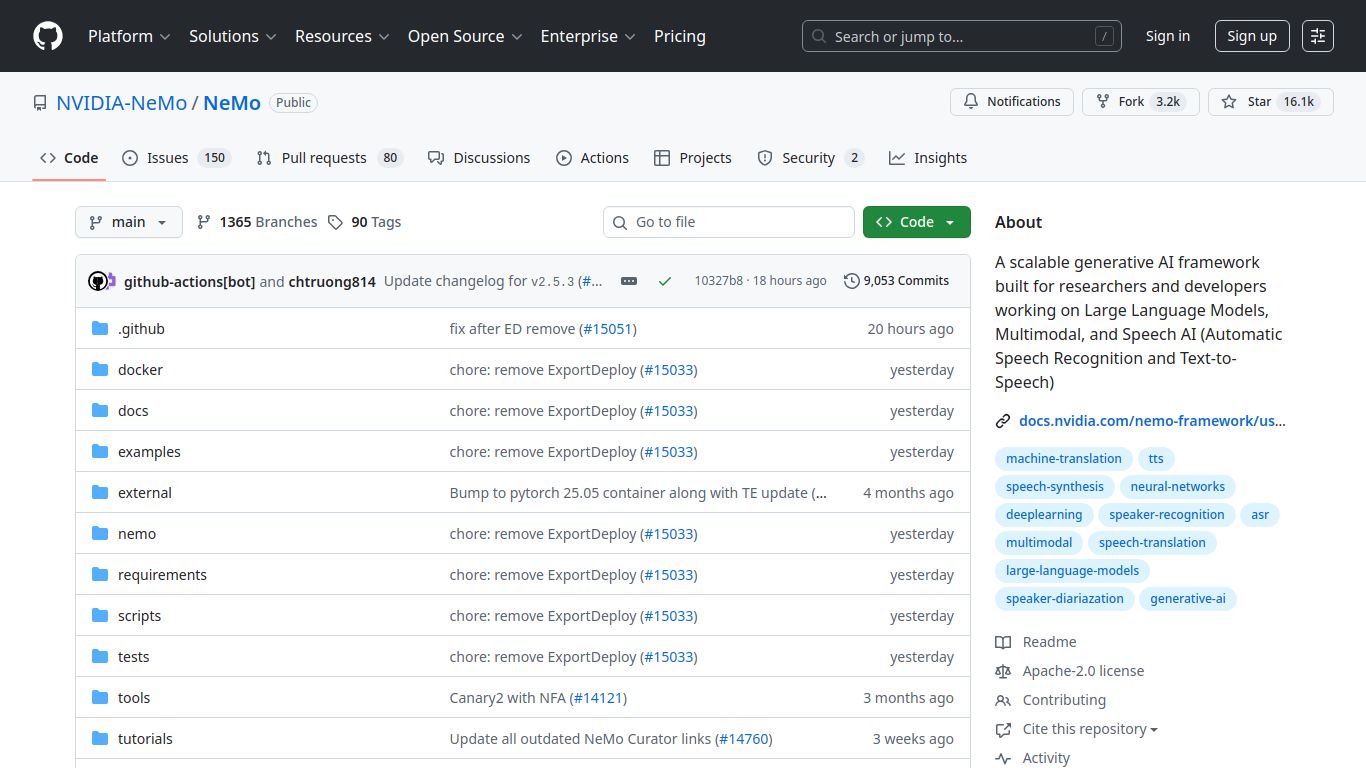

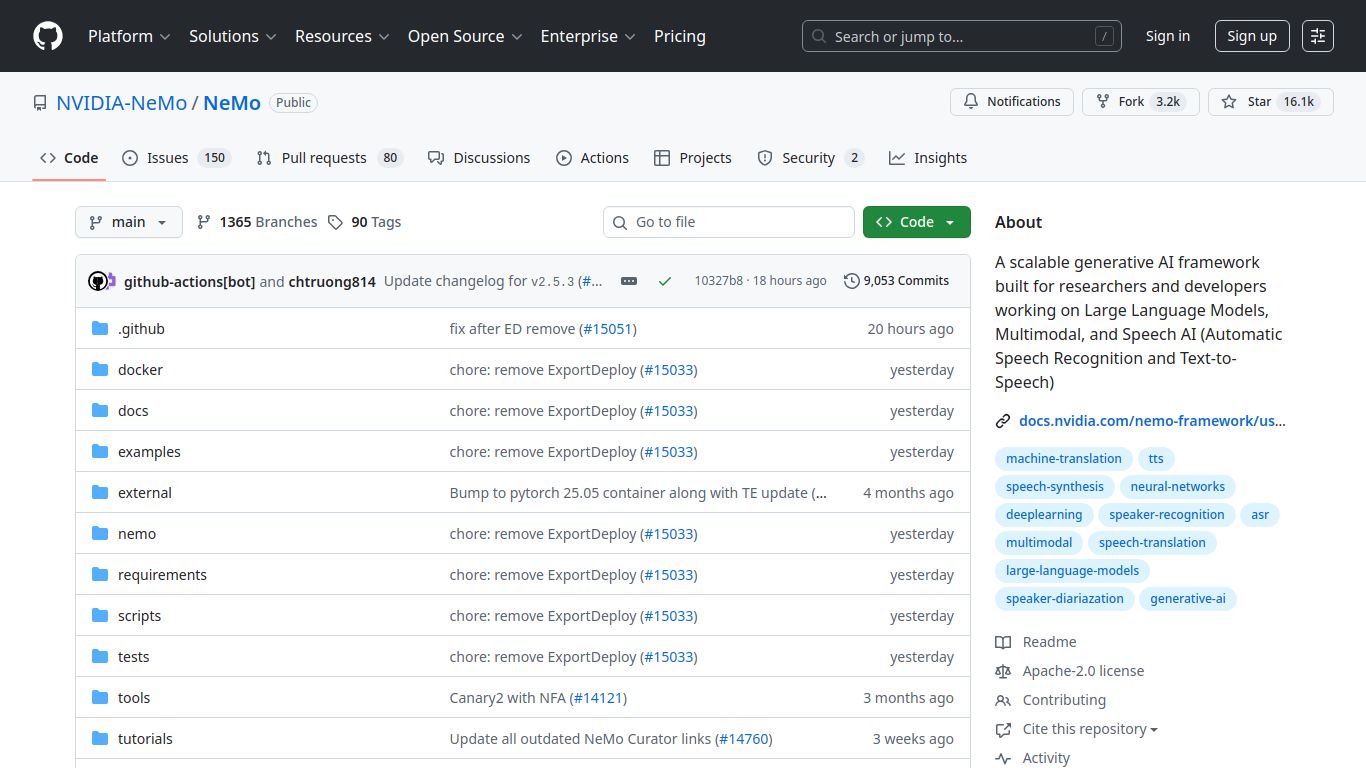

- Open-Source and Community-Driven: Available on GitHub, NeMo benefits from a vibrant community of contributors. This means constant updates, a wealth of shared knowledge, and the freedom to inspect and modify the code to your heart’s content.
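To make the pre-trained model workflow concrete, here is a minimal sketch of loading an ASR checkpoint and transcribing audio files. It assumes the `nemo_toolkit[asr]` package is installed and that the checkpoint name `stt_en_conformer_ctc_small` is available from NVIDIA's model catalog. The wrapper function `transcribe_files` is a hypothetical helper written for this example, and the exact return type of `transcribe` varies between NeMo releases, so treat this as a starting point rather than a definitive recipe.

```python
from typing import List


def transcribe_files(
    paths: List[str],
    model_name: str = "stt_en_conformer_ctc_small",  # assumed checkpoint name
) -> List[str]:
    """Transcribe audio files with a pre-trained NeMo ASR model.

    Hypothetical helper for illustration only.
    """
    if not paths:
        return []

    # Imported lazily so this module can be loaded without NeMo installed.
    import nemo.collections.asr as nemo_asr

    # Downloads the checkpoint on first use and restores the model.
    model = nemo_asr.models.ASRModel.from_pretrained(model_name=model_name)

    # Depending on the NeMo version, results may be plain strings or
    # hypothesis objects carrying the transcript in a `.text` attribute.
    results = model.transcribe(paths)
    return [r if isinstance(r, str) else r.text for r in results]


# Example call (requires NeMo and a local audio file; the path is a placeholder):
# print(transcribe_files(["sample.wav"]))
```

The same `from_pretrained` pattern is the usual entry point before fine-tuning: you restore a checkpoint, point it at your own data, and continue training instead of starting from scratch.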

Pricing: Is It Really Free?

Yes, it is! NVIDIA NeMo is an open-source toolkit released under the permissive Apache 2.0 license. This means you can download, use, modify, and distribute it for both commercial and personal projects completely free of charge. There are no subscription fees or hidden costs for the software itself. However, it’s important to remember the operational costs. Training and deploying high-performance AI models requires significant computational resources, typically powerful NVIDIA GPUs. So, while the software is free, you’ll need to account for the cost of your hardware or cloud computing services (like AWS, GCP, or Azure) to run it effectively.

Who Should Use NVIDIA NeMo?

NVIDIA NeMo is a powerful framework tailored for a technical audience. It’s the perfect match for:

- AI Researchers: Academics and R&D professionals who need a flexible platform to experiment with novel architectures and push the boundaries of conversational AI.

- Machine Learning Engineers: Developers tasked with building, training, and deploying production-grade ASR, NLP, or TTS systems. The scalability and pre-trained models significantly accelerate development cycles.

- Data Scientists: Practitioners who want to fine-tune state-of-the-art models on custom datasets for specific business applications, such as industry-specific transcription or sentiment analysis.

- Enterprise Teams: Companies looking to build in-house conversational AI solutions that offer maximum control, customization, and data privacy, avoiding reliance on third-party APIs.

NVIDIA NeMo vs. The Competition

How does NeMo stack up against other popular speech and language tools? Here’s a quick comparison:

- vs. OpenAI Whisper: Both NeMo and Whisper are open source, but they are different kinds of tools. Whisper is a family of pre-trained ASR models known for strong out-of-the-box accuracy across many languages, which makes it very simple to use for general transcription. NeMo, on the other hand, takes a more comprehensive toolkit approach, providing greater flexibility for training from scratch, fine-tuning for specific domains, and integrating NLP and TTS components into a complete pipeline.

- vs. Google Cloud Speech-to-Text & Amazon Transcribe: These are fully managed, cloud-based API services. They are easy to integrate and require no infrastructure management, making them ideal for developers who want a quick “plug-and-play” solution. The downsides are cost (pay-per-use), less control over the underlying models, and potential data privacy concerns. NeMo provides far more control and customizability for those willing to manage their own infrastructure.
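The cost trade-off between a pay-per-use API and self-hosted inference can be estimated with some simple arithmetic. The sketch below is a rough model under stated assumptions: every price, the throughput figure (`realtime_factor`, meaning hours of audio processed per GPU hour), and the fixed monthly overhead are hypothetical inputs you would replace with your own numbers. None of them are real vendor prices or measured NeMo benchmarks.

```python
from typing import Optional


def api_cost(audio_hours: float, price_per_audio_hour: float) -> float:
    """Monthly cost of a managed, pay-per-use transcription API."""
    return audio_hours * price_per_audio_hour


def self_hosted_cost(
    audio_hours: float,
    gpu_price_per_hour: float,
    realtime_factor: float,
    fixed_monthly_cost: float = 0.0,
) -> float:
    """Monthly cost of running your own model on rented GPUs.

    realtime_factor: hours of audio transcribed per GPU hour (assumed).
    fixed_monthly_cost: engineering and operations overhead (assumed).
    """
    gpu_hours = audio_hours / realtime_factor
    return fixed_monthly_cost + gpu_hours * gpu_price_per_hour


def break_even_audio_hours(
    price_per_audio_hour: float,
    gpu_price_per_hour: float,
    realtime_factor: float,
    fixed_monthly_cost: float,
) -> Optional[float]:
    """Monthly audio volume above which self-hosting becomes cheaper.

    Returns None when self-hosting never pays off under these inputs.
    """
    saving_per_audio_hour = price_per_audio_hour - gpu_price_per_hour / realtime_factor
    if saving_per_audio_hour <= 0:
        return None
    return fixed_monthly_cost / saving_per_audio_hour
```

Below the break-even volume, a managed API is likely the cheaper option. Above it, the fixed overhead of running your own infrastructure is spread across enough audio that self-hosting starts to win, before even considering control and data privacy.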

In summary, if you need maximum control, strong performance, and a comprehensive, open-source toolkit to build custom conversational AI solutions, NVIDIA NeMo is a compelling choice. You can explore its full potential on its official GitHub repository.
