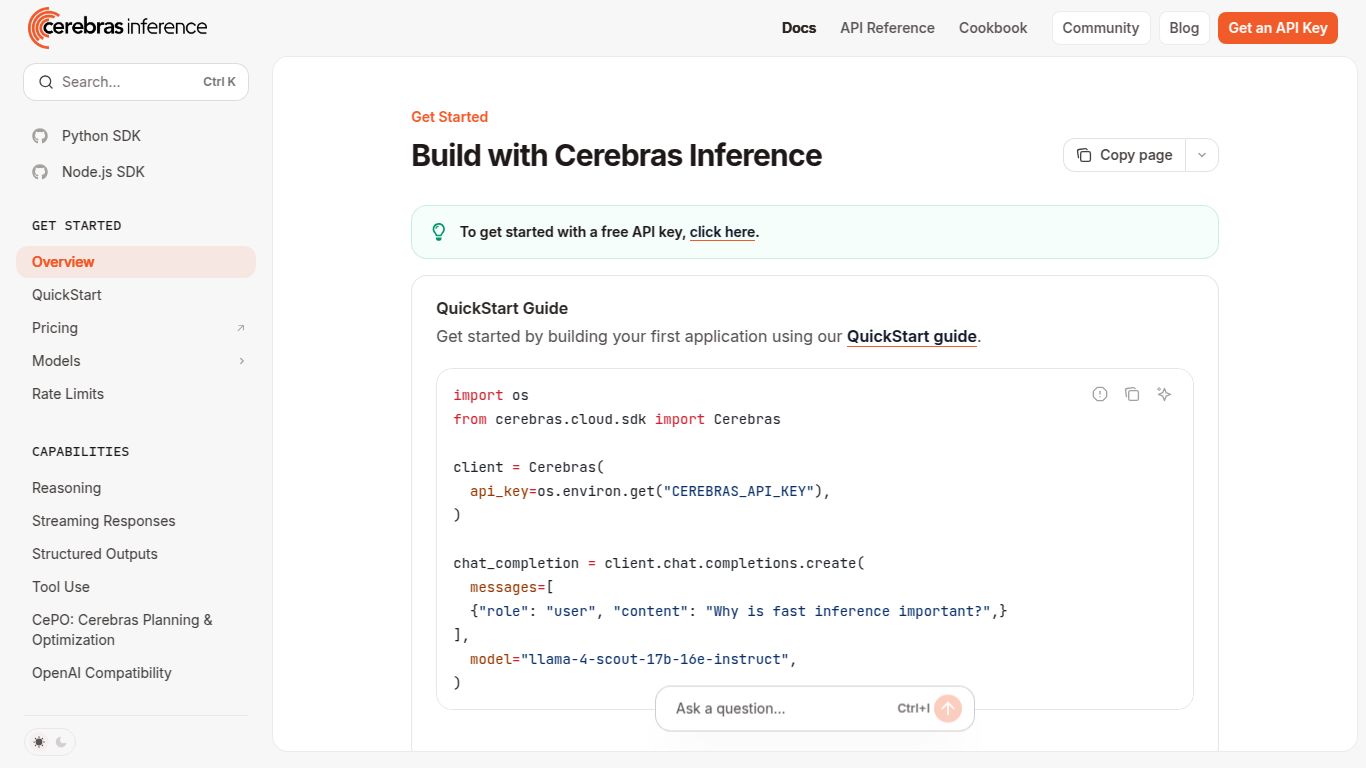

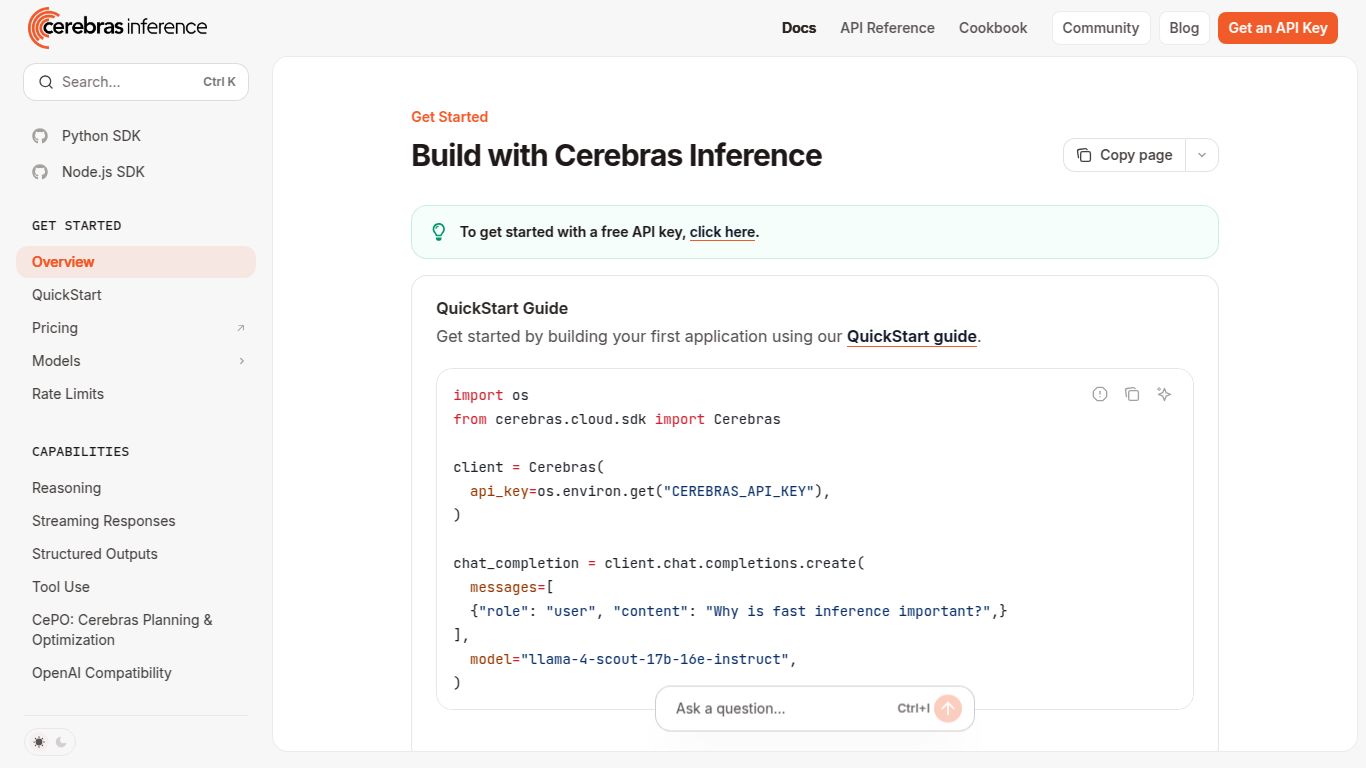

Cerebras Inference: Blazing-Fast LLM APIs on World-Class Hardware

Cerebras Inference, from AI hardware company Cerebras Systems, is a serverless API platform for running large language models (LLMs) at high speed. Instead of managing your own infrastructure, you call Cerebras’s wafer-scale hardware through a simple, developer-friendly API. The service is built for high-throughput, low-latency text generation, which suits developers and businesses that want to scale AI-powered features without the operational overhead.

Capabilities

Cerebras Inference focuses on one thing: text generation. It does not generate images, video, or audio. By hosting a curated selection of open-source LLMs, it lets you build applications for:

- Advanced Chatbots and Conversational AI: Create fluid, intelligent, and responsive virtual assistants.

- Content Creation and Summarization: Generate articles, marketing copy, or instantly summarize long documents.

- Code Generation and Assistance: Build tools that help developers write, debug, and understand code more efficiently.

- Data Analysis and Extraction: Process unstructured text to pull out key information and insights.

- Research and Development: Experiment with large-scale language models without needing your own supercomputer.

Features

What makes Cerebras Inference stand out in a crowded market? It all comes down to its unique architecture and philosophy.

- Powered by Wafer-Scale Hardware: Your API calls are processed on Cerebras’s specialized wafer-scale AI systems, which are designed to deliver lower latency and higher throughput than typical commodity GPU deployments.

- Truly Serverless Experience: Forget about managing servers, scaling clusters, or patching systems. Cerebras handles all the infrastructure, so you can focus solely on your application code.

- Pay-As-You-Go Simplicity: The pricing model is transparent and straightforward. You pay only for the tokens you process, with no fixed cost, which suits both small experiments and large-scale production workloads.

- Open-Source Model Freedom: Gain access to a growing library of best-in-class open models like Llama 2, Mixtral, and BTLM. This gives you the flexibility to choose the right model for your task without being locked into a proprietary ecosystem.

- Effortless Integration: With a clean and simple REST API, integrating Cerebras Inference into your existing applications is a breeze. It’s designed to be instantly familiar to any developer.
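As a rough illustration of what a REST integration could look like, the sketch below builds (but does not send) a JSON POST request using only the Python standard library. The endpoint URL, the chat-style payload fields (`model`, `messages`, `max_tokens`), and the bearer-token header are assumptions made for illustration, not details confirmed by this page; check the official documentation for the real endpoint and schema.

```python
import json
import urllib.request

# Hypothetical placeholder endpoint; substitute the URL from the official docs.
API_URL = "https://api.example.com/v1/chat/completions"


def build_chat_request(api_key, model, user_message, max_tokens=256):
    """Build (but do not send) a JSON POST request for a chat-style completion.

    The payload shape is an assumed, commonly used chat schema, not a
    confirmed Cerebras Inference request format.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("YOUR_API_KEY", "Llama-2-7b-chat", "Summarize this document.")
```

Sending the request would be a matter of passing it to `urllib.request.urlopen`, which requires a real API key and the actual endpoint.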

Pricing

Cerebras Inference uses simple pay-as-you-go pricing rather than a subscription. There are no monthly commitments, no platform fees, and no hidden charges. You are billed separately for the input (prompt) tokens and the output (generated) tokens your application uses, each at a per-model rate, so a request's cost is its input tokens times the input rate plus its output tokens times the output rate. This makes it easy to predict costs and scale affordably.

Example Model Pricing (per 1 million tokens):

- Llama-2-7b-chat: $0.10 (Input) / $0.50 (Output)

- Llama-2-13b-chat: $0.20 (Input) / $1.00 (Output)

- Llama-2-70b-chat: $0.70 (Input) / $2.80 (Output)

- Mixtral-8x7B-Instruct: $0.30 (Input) / $1.20 (Output)

Note: Prices are subject to change. Please refer to the official Cerebras Inference documentation for the most current rates.
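To make the token-based billing concrete, here is a minimal cost-estimation sketch that uses the example rates listed above. The `EXAMPLE_RATES` table and the `estimate_cost` helper are illustrative names introduced here, and the rates are this page's example figures rather than authoritative prices.

```python
from decimal import Decimal

# Example rates from this page, in USD per 1 million tokens: (input, output).
# Illustrative only; current prices may differ.
EXAMPLE_RATES = {
    "Llama-2-7b-chat": (Decimal("0.10"), Decimal("0.50")),
    "Llama-2-13b-chat": (Decimal("0.20"), Decimal("1.00")),
    "Llama-2-70b-chat": (Decimal("0.70"), Decimal("2.80")),
    "Mixtral-8x7B-Instruct": (Decimal("0.30"), Decimal("1.20")),
}

TOKENS_PER_UNIT = Decimal(1_000_000)  # rates are quoted per 1 million tokens


def estimate_cost(model, input_tokens, output_tokens):
    """Return the estimated cost in USD for the given token counts."""
    input_rate, output_rate = EXAMPLE_RATES[model]
    total = input_tokens * input_rate + output_tokens * output_rate
    return total / TOKENS_PER_UNIT


# 2,000,000 prompt tokens and 500,000 generated tokens on the 70B model.
cost = estimate_cost("Llama-2-70b-chat", 2_000_000, 500_000)
```

With these example rates, that workload comes to $1.40 for input plus $1.40 for output, or $2.80 in total. `Decimal` is used instead of floats so that currency arithmetic stays exact.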

Target Audience

This powerful platform is built for those who build. It’s not a consumer-facing application but a foundational tool for a range of technical professionals.

- AI and Machine Learning Engineers: The primary audience. Professionals who are building, deploying, and scaling AI applications.

- Software Developers: Any developer looking to integrate sophisticated language features into their web or mobile applications without becoming an AI expert.

- Startup Founders & CTOs: Leaders who need a scalable, cost-effective way to build an AI-powered MVP or enhance an existing product.

- Data Scientists and Researchers: Academics and professionals who need to run experiments on large models without the prohibitive cost and complexity of managing hardware.

- Enterprise IT Teams: Corporate teams looking for a reliable, high-performance inference solution to power internal tools or customer-facing services.

Alternatives & Comparison

Cerebras Inference operates in a competitive space, but its unique hardware gives it a distinct edge.

Cerebras Inference vs. OpenAI API

While OpenAI offers powerful proprietary models like GPT-4, Cerebras focuses on providing top performance for open-source models. This gives developers more transparency, control, and freedom from vendor lock-in. If your priority is customizability and leveraging the open-source community, Cerebras is a compelling choice.

Cerebras Inference vs. Cloud Giants (AWS SageMaker, Google Vertex AI)

Major cloud providers offer comprehensive MLOps platforms, but they can be notoriously complex and expensive to configure and manage. Cerebras offers a far simpler, developer-first serverless experience. It abstracts away all the complexity, delivering pure performance through a straightforward API.

Cerebras Inference vs. Other Inference Platforms (e.g., Replicate, Anyscale)

Many platforms offer model hosting on commodity GPU hardware. Cerebras’s key differentiator is its custom-built wafer-scale hardware. This allows it to offer potentially superior performance, especially for handling very long sequences or achieving the lowest possible latency, making it ideal for real-time applications.
