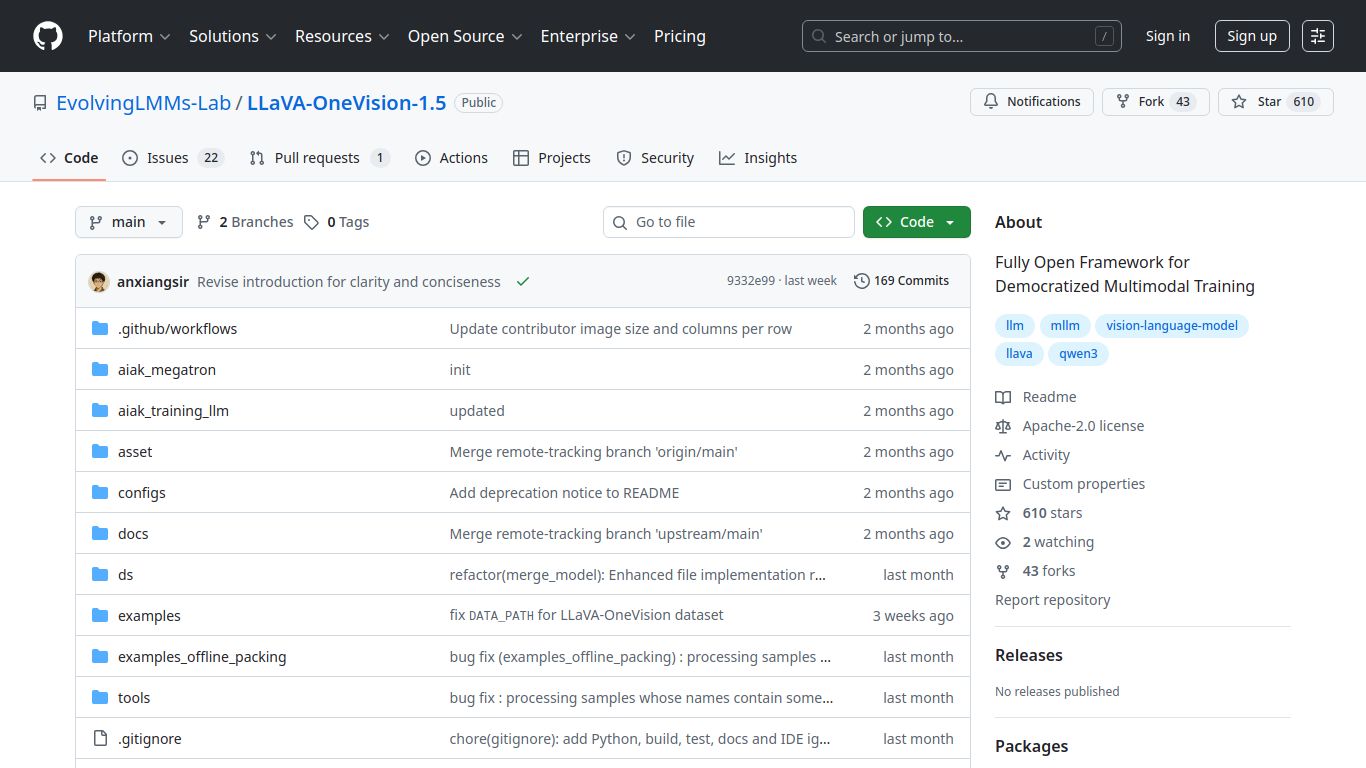

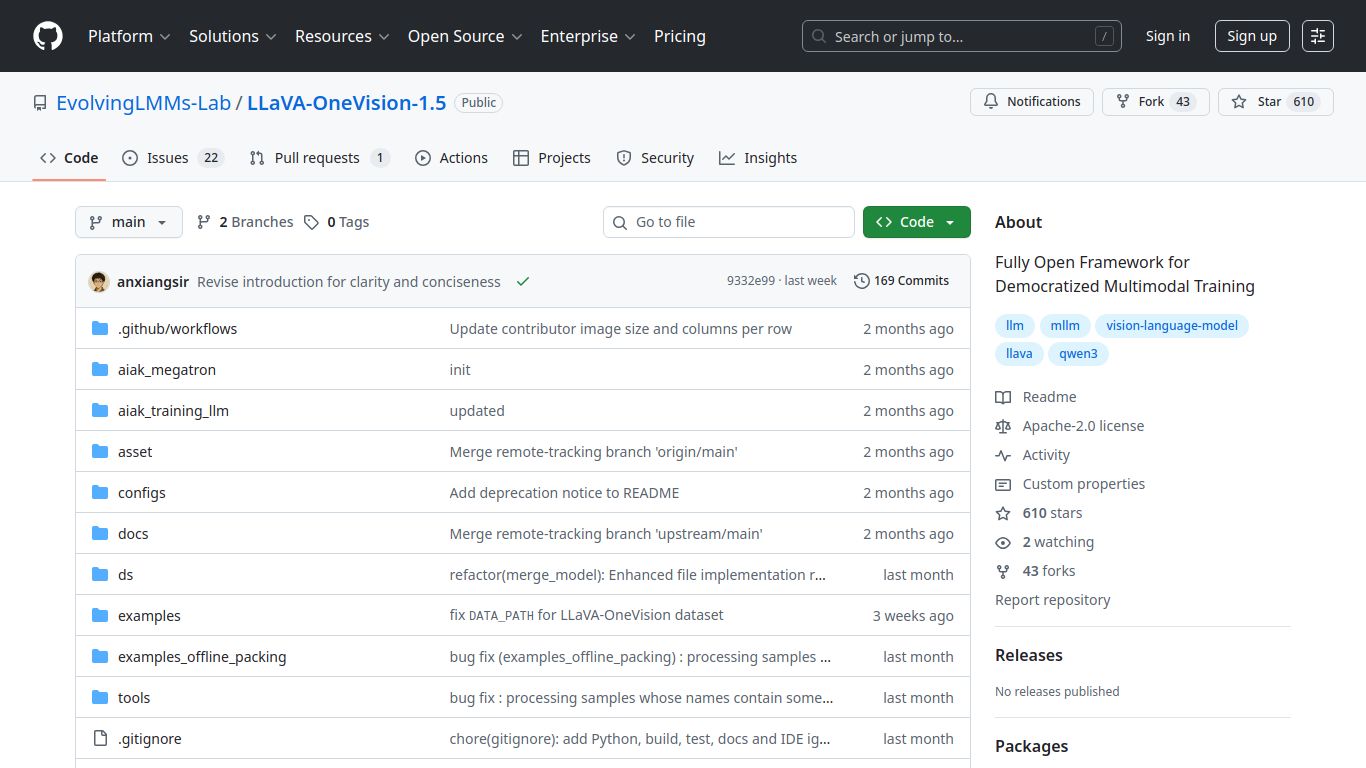

LLaVA-OneVision 1.5: The Open-Source AI That Sees and Understands Your World

Ever wished your AI could not only read text but also see and interpret images? Meet LLaVA-OneVision 1.5, an open-source Large Multimodal Model (LMM) developed by EvolvingLMMs-Lab. This isn’t just another chatbot; it’s a vision-language model designed to connect visual perception with language reasoning. You can hold a conversation with it about a photo: ask it to analyze details, explain what is happening in a scene, or read text embedded in the image. LLaVA-OneVision 1.5 puts these capabilities in an openly available model that you can run on your own hardware.

What Can LLaVA-OneVision 1.5 Do?

LLaVA-OneVision 1.5 specializes in multimodal understanding. It does not generate images or videos; instead, it analyzes and interprets them, acting as a capable set of eyes for your projects.

- Advanced Visual Question Answering (VQA): Go beyond simple “what is this?” questions. Ask multi-part questions about the contents of an image, the relationships between objects, and the context of the scene, and receive detailed answers.

- Rich Image Description and Captioning: Automatically generate vivid, narrative descriptions for your images. This is perfect for accessibility applications, content cataloging, or simply understanding a picture at a deeper level.

- Optical Character Recognition (OCR) with Reasoning: The model can read text in images, whether it’s on a street sign, a document, or a product label, and then reason about that text in context.

- Text and Code Comprehension: LLaVA-OneVision 1.5 is built on a Large Language Model backbone, so it also handles text-only tasks such as summarization, translation, and code generation.
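To make the VQA, captioning, and OCR use cases concrete, here is a minimal sketch of how a question about an image might be packaged as a request. It assumes you have deployed the model yourself behind an inference server that exposes an OpenAI-compatible chat completions endpoint; the model name `llava-onevision-1.5` and the helper names are hypothetical placeholders, not official identifiers from the project. The message layout (a `content` list mixing `image_url` and `text` parts, with the image inlined as a base64 data URL) follows the OpenAI chat format that many self-hosted servers accept, but check your server’s documentation before relying on it.

```python
import base64
import json
import mimetypes
from pathlib import Path

# Hypothetical name under which your own server exposes the model; not an official ID.
DEFAULT_MODEL = "llava-onevision-1.5"


def build_vqa_request(image_bytes: bytes, mime_type: str, question: str,
                      model: str = DEFAULT_MODEL, max_tokens: int = 512) -> dict:
    """Pair one image with one question in an OpenAI-style chat completions payload.

    Assumes the server accepts images as base64 data URLs inside an
    `image_url` content part, as many OpenAI-compatible servers do.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url",
                 "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
                {"type": "text", "text": question},
            ],
        }],
    }


def build_vqa_request_from_file(path: str, question: str, **kwargs) -> dict:
    """Read an image from disk, guessing its MIME type from the file extension."""
    mime_type = mimetypes.guess_type(path)[0] or "application/octet-stream"
    return build_vqa_request(Path(path).read_bytes(), mime_type, question, **kwargs)


if __name__ == "__main__":
    # Placeholder bytes stand in for a real image so the sketch runs offline.
    payload = build_vqa_request(b"not-a-real-png", "image/png",
                                "What does the text on the street sign say?")
    print(json.dumps(payload, indent=2))
```

Swapping the question for “Describe this image in detail” turns the same payload into a captioning request. On OpenAI-compatible servers the JSON is typically POSTed to a `/v1/chat/completions` route, but the exact URL depends on how you deploy the model.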

Core Features That Set It Apart

Beyond raw capability, the model is built with openness and accessibility in mind, which makes it a strong choice for developers and researchers.

- Completely Open-Source: You are free to download, modify, and deploy the model for research or commercial projects without licensing fees, subject to the terms of its published license. Because you host it yourself, your data stays on your own infrastructure.

- Strong Benchmark Performance: The LLaVA-OneVision 1.5 team reports competitive results on major academic benchmarks, and on some visual reasoning tasks the model rivals or exceeds proprietary models. You get high-end performance without an enterprise price tag.

- Cost-Efficient Training: The project emphasizes an efficient training pipeline that aims to reach strong performance with less compute and data than many of its predecessors. This makes cutting-edge multimodal AI more accessible to reproduce and extend.

- Deep Multimodal Reasoning: The model goes beyond identifying objects to reasoning about them. It can often infer intent, pick up on humor, and engage with abstract concepts in a visual scene, producing a richer analysis than object labels alone.

Pricing: Freedom is Priceless

Free (Open-Source)

That’s right, LLaVA-OneVision 1.5 is completely free to use. As an open-source project hosted on GitHub, there are no subscriptions, no API fees, and no hidden costs. The only investment required is the computational hardware (like GPUs) needed to run or fine-tune the model yourself, giving you full control over your expenses and infrastructure.
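Because the main cost of self-hosting is hardware, a useful first check is how much GPU memory the weights alone will occupy, using the rule of thumb parameter count × bytes per parameter. The sketch below applies that rule; the 8-billion-parameter figure in the example is an illustrative assumption, not a statement about a specific checkpoint, so substitute the size of the model you actually download. Activations, the KV cache, and framework overhead come on top of this, so treat the result as a lower bound.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Lower-bound estimate of GPU memory (decimal GB) for the model weights only.

    Ignores activations, KV cache, and framework overhead, which add to the total.
    """
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype {dtype!r}; expected one of {sorted(BYTES_PER_PARAM)}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 8e9  # hypothetical checkpoint size; replace with the real parameter count
    for dtype in ("fp32", "bf16", "int4"):
        # Prints 32.0, 16.0, and 4.0 GB respectively for 8e9 parameters.
        print(f"{dtype}: ~{weight_memory_gb(params, dtype):.1f} GB for weights")
```

Lower-precision formats such as int4 shrink the footprint considerably, at some potential cost in output quality, which is one lever for fitting the model onto smaller GPUs.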

Who is LLaVA-OneVision 1.5 For?

This powerful tool is designed for builders, innovators, and thinkers who are working on the cutting edge of artificial intelligence.

- AI Researchers & Academics: Ideal for those pushing the boundaries of what’s possible in multimodal AI and vision-language studies.

- Machine Learning Engineers: A strong foundation for integrating sophisticated visual understanding into real-world applications.

- Software Developers: A practical base for building next-gen apps, from advanced accessibility tools for the visually impaired to intelligent inventory management systems.

- Data Scientists: Unlock new insights by analyzing vast datasets of images and text in tandem.

- AI Hobbyists & Enthusiasts: An incredible opportunity to experiment with and learn from a state-of-the-art, open-source model.

LLaVA-OneVision 1.5 vs. The Competition

In a crowded field of AI models, LLaVA-OneVision 1.5 occupies a distinct niche. Compared with earlier open-source alternatives such as InstructBLIP or MiniGPT-4, it generally stands out for stronger results on reasoning benchmarks and a more efficient training pipeline. The bigger distinction, though, is against commercial models like OpenAI’s GPT-4V or Google’s Gemini. These closed-source models offer convenient API access, but they come with per-use costs, data privacy concerns, and limited customizability. LLaVA-OneVision 1.5 offers an alternative: full ownership, deep customizability, and no API fees. For projects that prioritize control, privacy, and cost-effectiveness while still needing strong performance, it is a compelling choice.
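The cost side of that comparison can be made concrete with a simple break-even calculation. Self-hosting has a roughly fixed monthly hardware cost, while a paid API charges per request, so self-hosting becomes cheaper once monthly volume exceeds the fixed cost divided by the per-request price. The sketch below assumes that simplified model; all prices in it are hypothetical placeholders, not quotes from any provider, so plug in your own GPU rental or amortized hardware cost and your provider’s actual pricing (which is often per token rather than per request).

```python
import math


def break_even_requests(monthly_hosting_cost: float, api_cost_per_request: float) -> int:
    """Smallest monthly request count at which self-hosting is no more expensive than the API.

    Simplified model: hosting cost is fixed per month (hardware, power, ops),
    and the API bills a flat price per request.
    """
    if api_cost_per_request <= 0:
        raise ValueError("api_cost_per_request must be positive")
    return math.ceil(monthly_hosting_cost / api_cost_per_request)


def cheaper_option(monthly_requests: int, monthly_hosting_cost: float,
                   api_cost_per_request: float) -> str:
    """Compare both options for a given monthly volume under the same simplified model."""
    api_total = monthly_requests * api_cost_per_request
    return "self-host" if monthly_hosting_cost <= api_total else "api"


if __name__ == "__main__":
    hosting = 1200.0  # hypothetical monthly GPU cost in USD
    per_call = 0.01   # hypothetical API price per image question in USD
    print(break_even_requests(hosting, per_call))      # requests/month needed to break even
    print(cheaper_option(50_000, hosting, per_call))   # "api" at this volume
```

The model deliberately ignores engineering time and the value of keeping data in-house; those factors usually push the decision further toward self-hosting for privacy-sensitive workloads.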
